We analyzed 4 years of Gartner’s AI hype so you don’t make a bad investment in 2026

Gartner’s Hype Cycle is a well-known model that tries to map the chaos, but frankly, I don’t take it as gospel. It’s a flawed model, but a useful one for framing the conversation.

So, in this article, I’m using it as a lens. I’ll examine AI’s journey from 2022 to 2025 through this framework, and then layer on market assessments from ChatGPT, Grok, Kimi K2, and Gemini (all of them made using Deep Reasoning options) to show what is coming next (according to the data these LLMs could access).

My goal is to give you a pragmatic perspective that helps you make smarter business decisions.


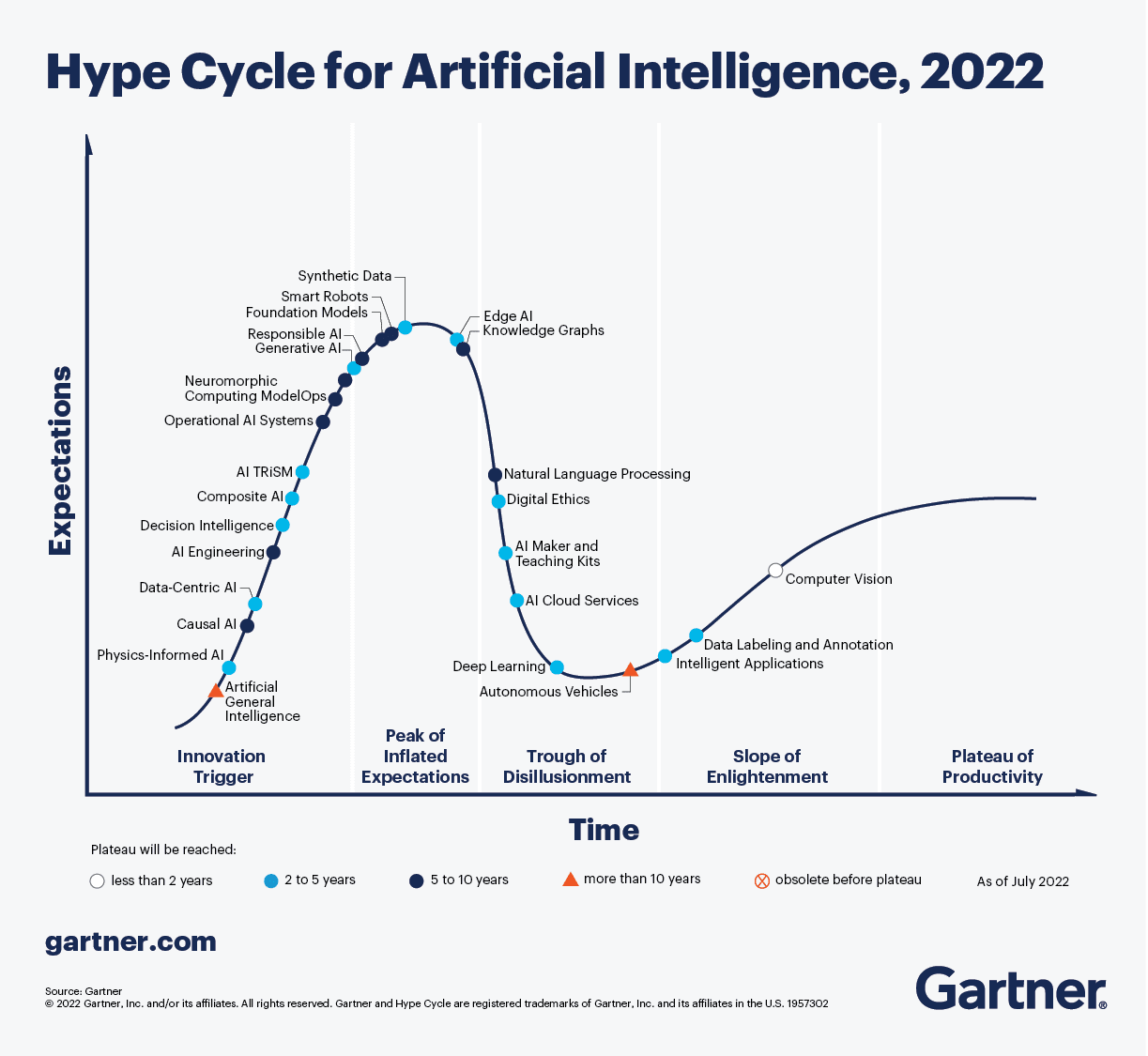

2022: Foundation Models

Source: What’s New in Artificial Intelligence from the 2022 Gartner Hype Cycle

What are the five phases of the AI Gartner Hype Cycle

Just months before ChatGPT changed everything, the AI world was surprisingly… sensible. The focus was on the hard, unglamorous work of making AI practical.

Gartner’s report from that year highlighted technologies for operationalizing AI. The main focus was on Composite AI (blending different AI techniques) and Decision Intelligence (improving organizational decision-making). The building block of the coming revolution, Foundation Models, was near its hype peak.

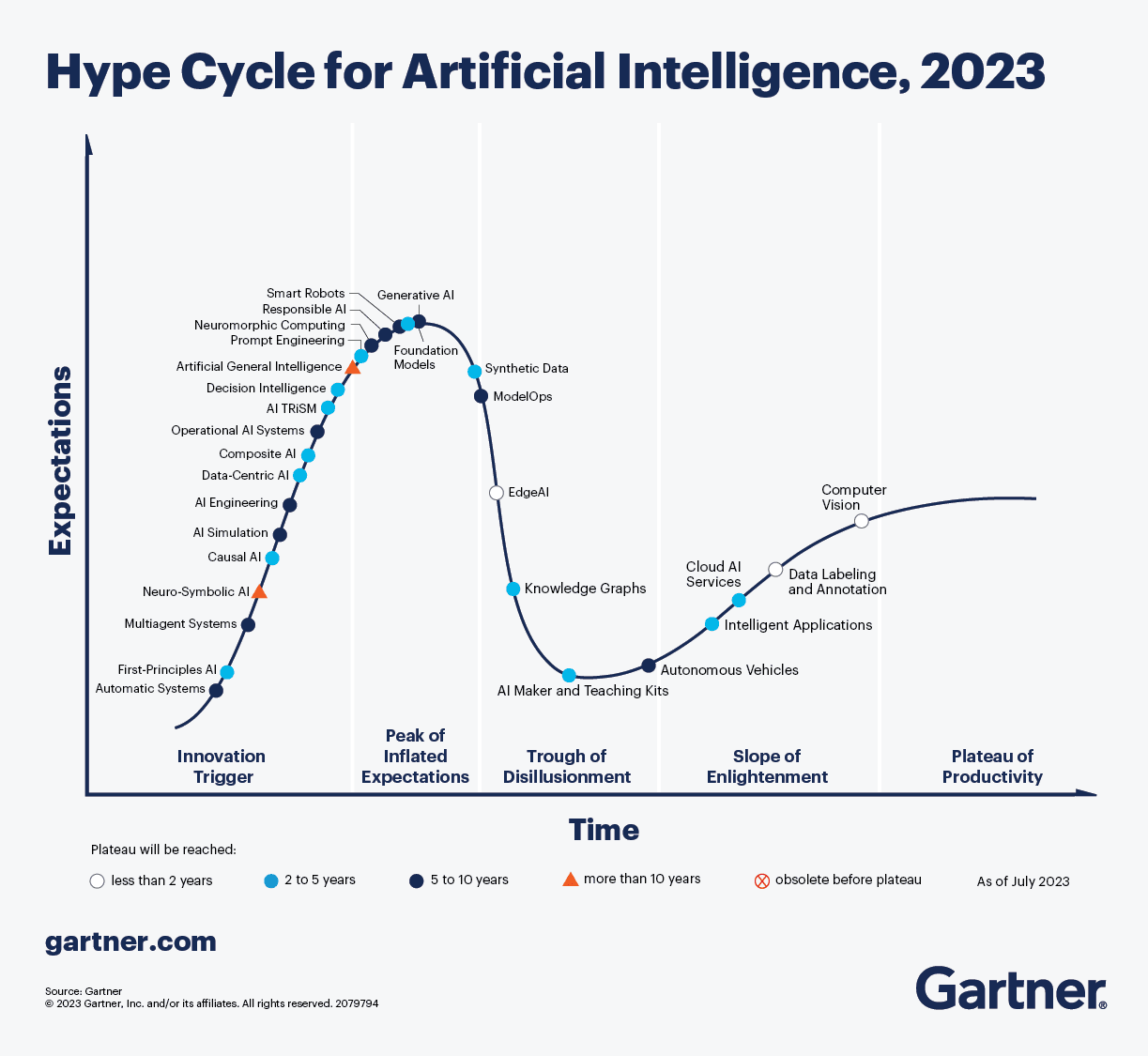

2023: Generative AI

Source: What’s New in Artificial Intelligence from the 2023 Gartner Hype Cycle

Fueled by the viral success of ChatGPT and Midjourney, 2023 saw Generative AI leap straight to the “Peak of Inflated Expectations”. The hype was huge, but as Gartner noted, it was based on cherry-picked examples, not a realistic view of its business-readiness.

This singular focus acted like a black hole for market attention. Other promising technologies, like Edge AI and Knowledge Graphs, were sidelined and began sliding into the “Trough of Disillusionment”.

It was a perfect example of how one massively popular solution – in this case GenAI – can become a center of gravity, pulling the entire industry into its orbit. The question was no longer if a company was doing AI, but what it was doing with GenAI.

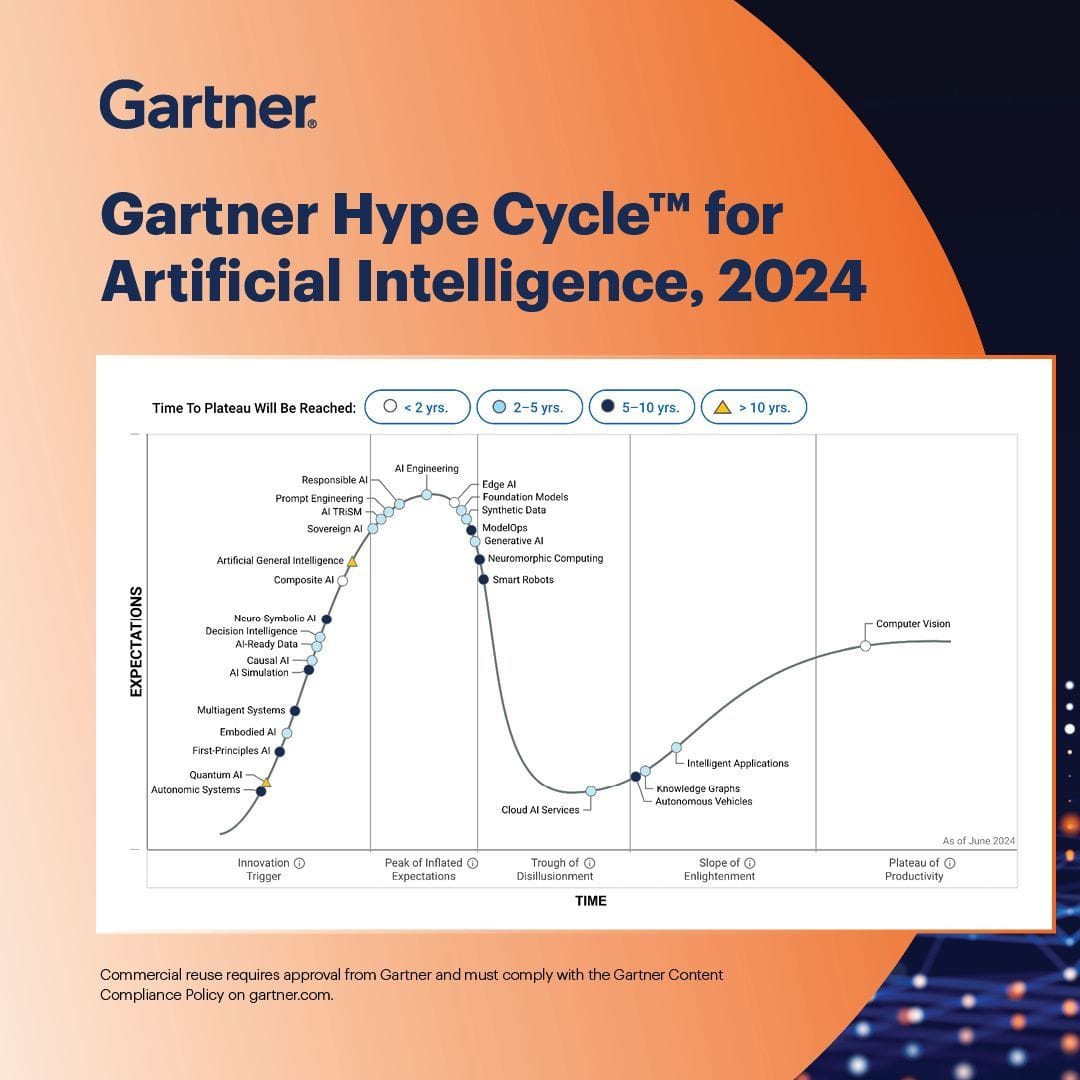

2024: AI Engineering

Source: Gartner’s Instagram Post

After the party comes the cleanup. 2024 was the year of the great AI hangover, where the conversation shifted from “what is GenAI?” to a much tougher question: “How do we actually implement, scale, and profit from this?”

As a result, Generative AI officially passed its peak and began the long slide into the “Trough of Disillusionment”. In its place, a new discipline climbed to the very top of the hype cycle: AI Engineering. The focus was now on building the robust MLOps, DataOps, and delivery platforms required to make AI work reliably at scale.

Tellingly, Knowledge Graphs made a strong comeback, moving to the “Slope of Enlightenment”. The market realized that to combat AI hallucinations, they needed to ground their models in verifiable facts. It was a classic post-hype pivot: we had our hype moment, but then we realized that it would take much more time and effort to be more than just a funny gimmick to share on LinkedIn. In other words, the world realized that for business cases GenAI… just didn’t work.

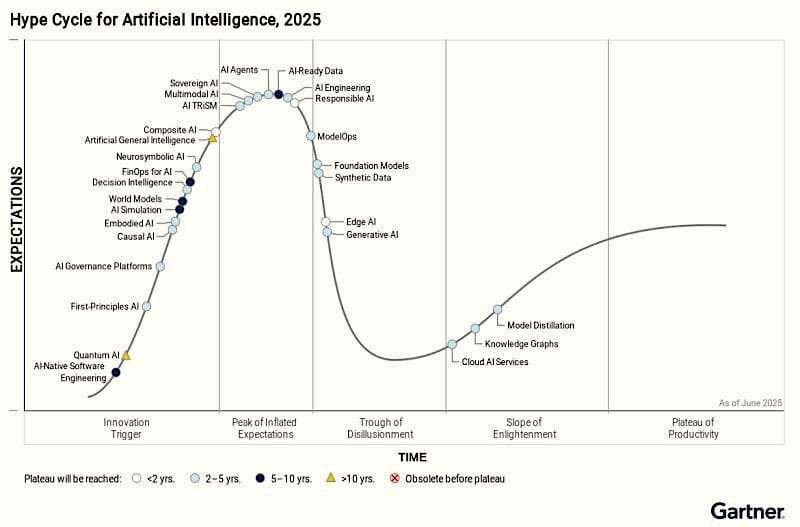

2025: What is the state of AI in 2025 per Gartner’s Hype Cycle?

Source: Jan Beger’s LinkedIn post

GenAI has slid into the Trough of Disillusionment. No wonder: GenAI projects burned an average of $1.9M per initiative yet left fewer than 30% of CEOs satisfied with ROI. Organizations are now pivoting from “wow” demos to hard problems: proving value, taming hallucinations, and aligning with regulations.

Meanwhile, AI-ready data and AI agents* sit at the Peak of Inflated Expectations. This shows two new bottlenecks: 57% of companies admit their data isn’t ready for AI, and agents raise governance and security nightmares (access control, hallucinations).

The quiet winners are AI Engineering and ModelOps – the foundational disciplines that turn scattered pilots into scalable, auditable production systems – and the brand-new entry AI-native software engineering, which promises to augment (and gradually replace) large chunks of the SDLC with AI-first tooling.

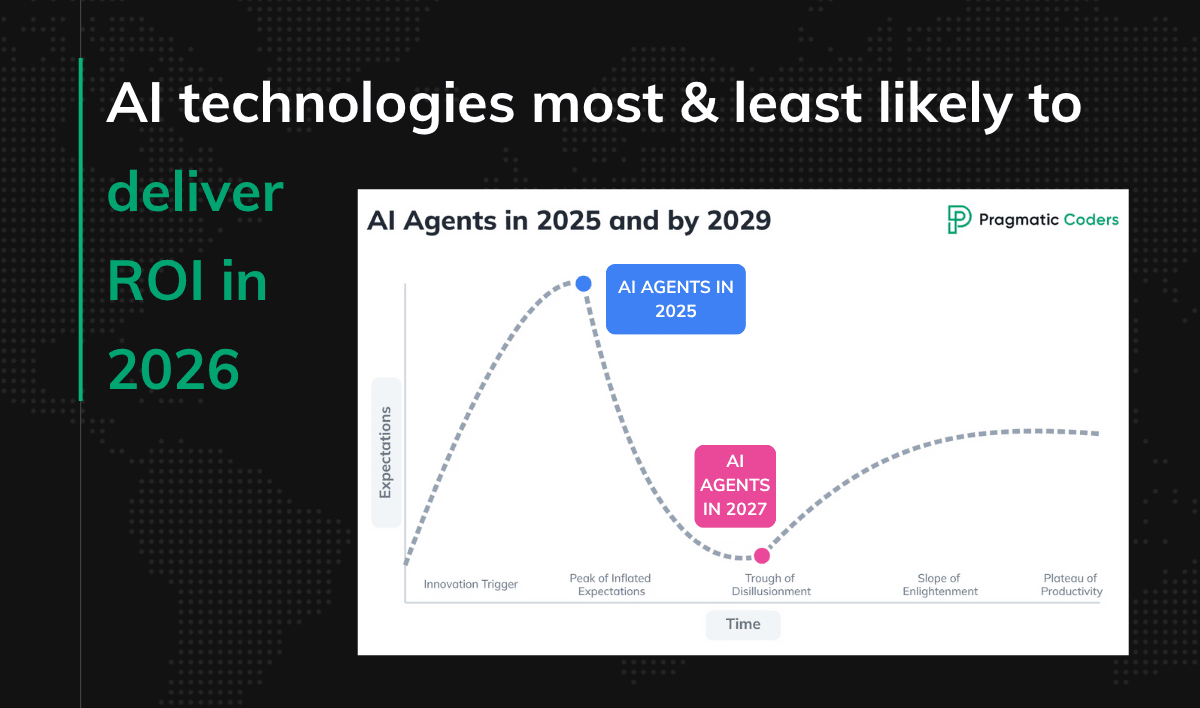

*I’d say that right now, at least as of August 2025 at Pragmatic Coders, people are getting more and more overstimulated by the term “AI agents” popping up EVERYWHERE. “AI agent” is a vague term, and since everyone has their own definition of it, are we sure we’re on the same page? Actually, I think we’re already sliding down into the Trough of Disillusionment.

What are the key AI technology trends for 2025 and beyond?

| Technology | 2025 Position | 2026 | 2027 | 2028 | 2029 |

|---|---|---|---|---|---|

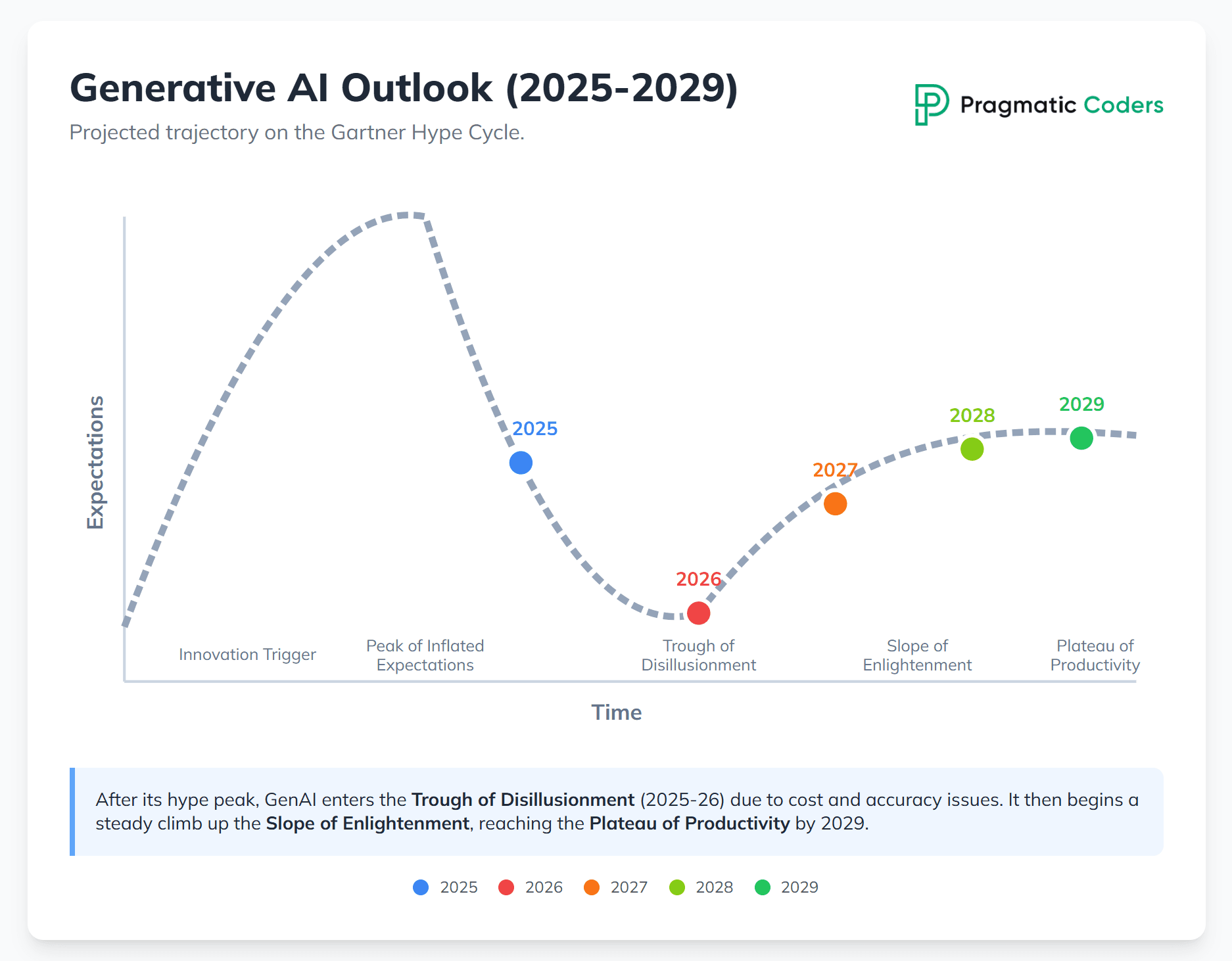

| Generative AI | Trough of Disillusionment | Trough (late) | Slope of Enlightenment (early) | Slope (late), growing use cases | Plateau of Productivity (early) |

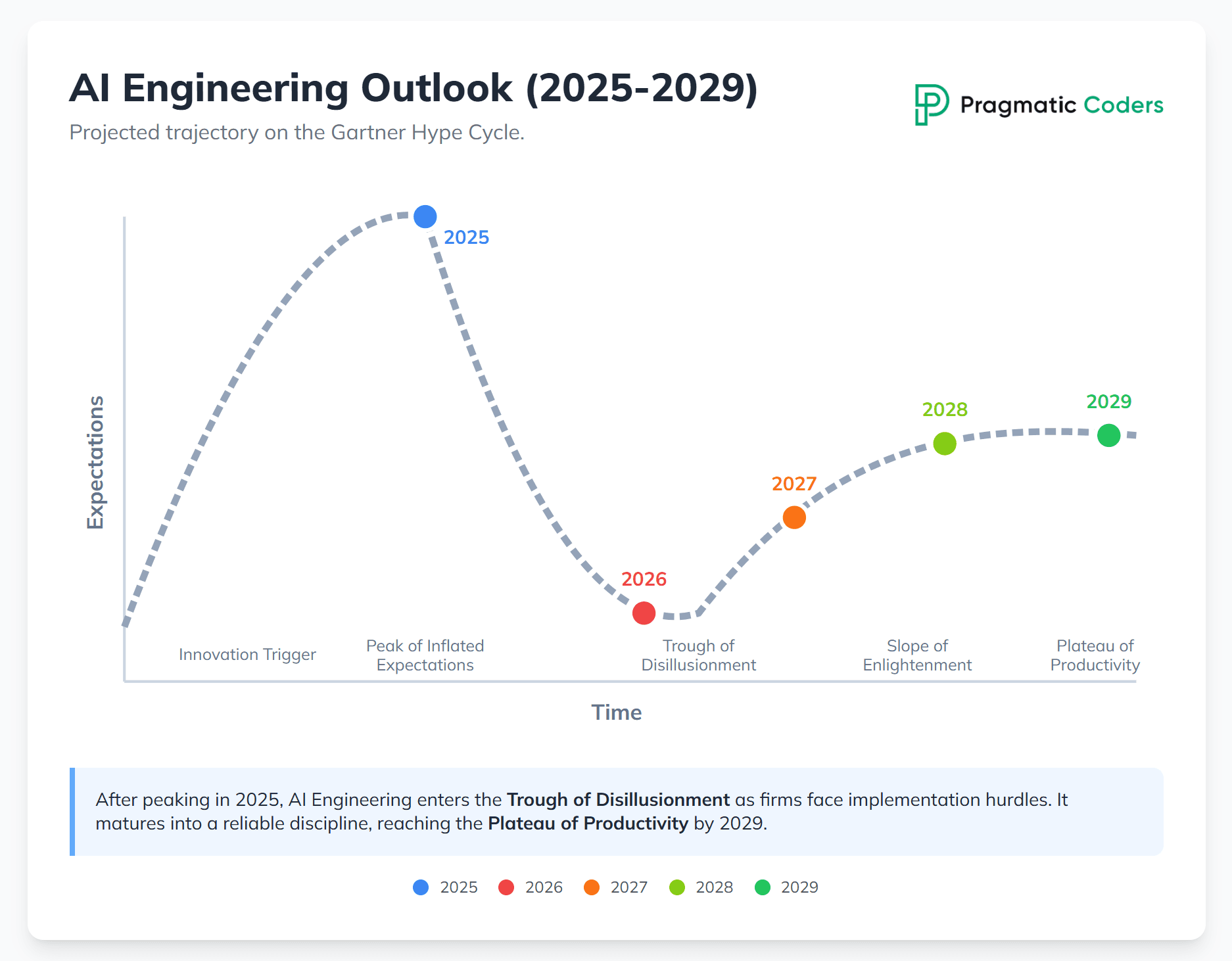

| AI Engineering | Peak of Inflated Expectations | Trough of Disillusionment | Slope (early), process maturity | Slope (late), scalable adoption | Plateau of Productivity |

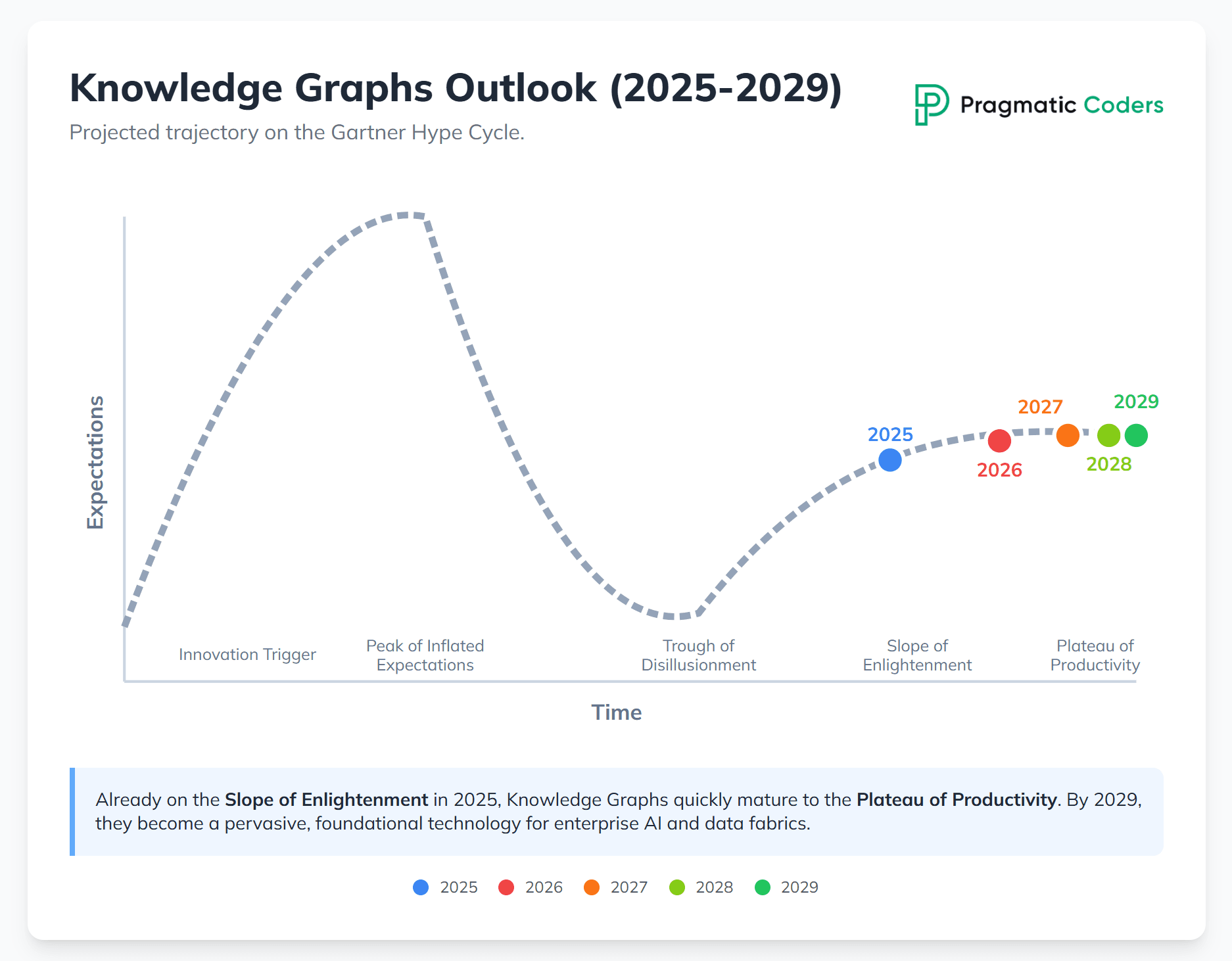

| Knowledge Graphs | Slope of Enlightenment | Slope (late) | Plateau of Productivity (early) | Plateau (established) | Post-plateau (pervasive use) |

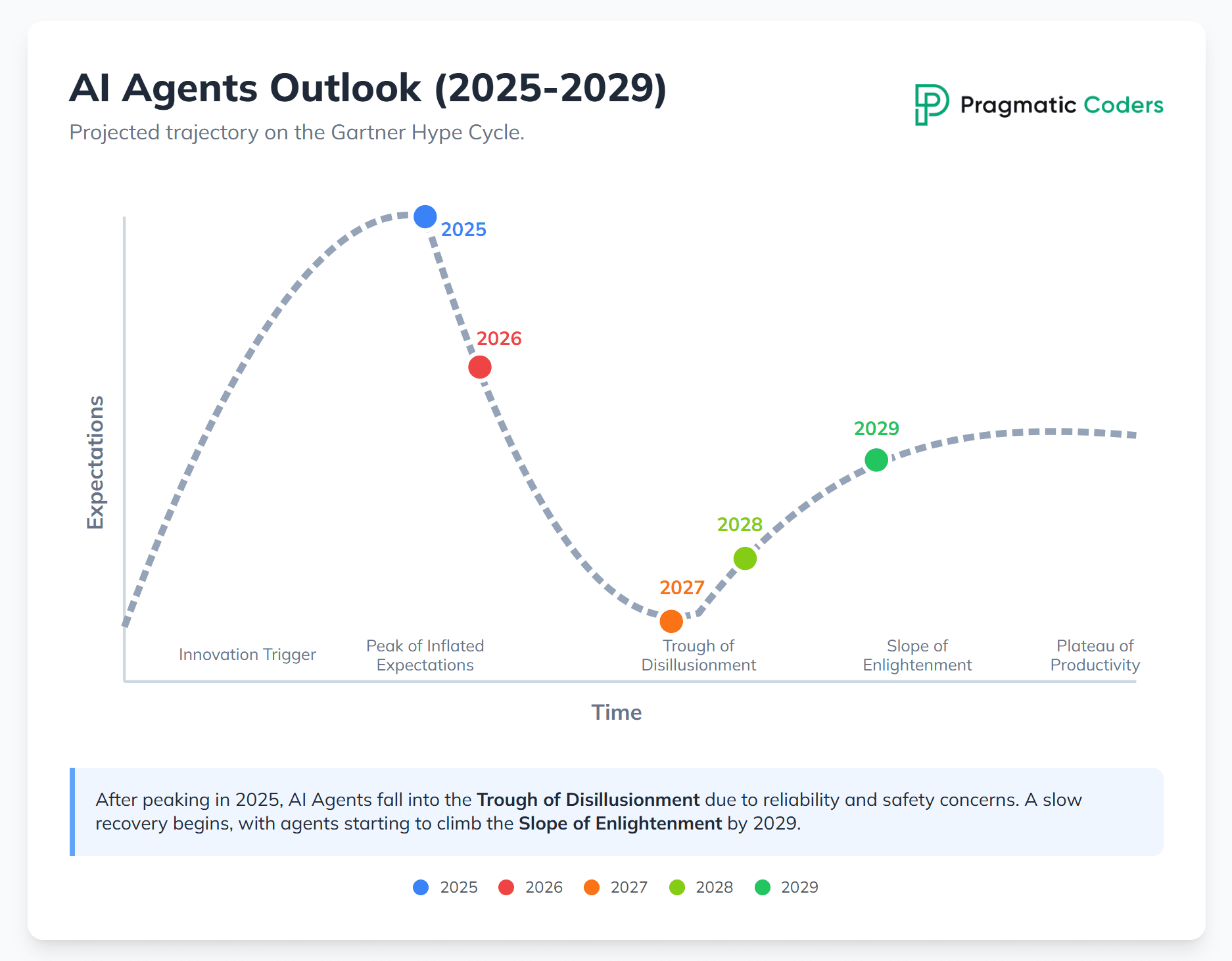

| AI Agents | Peak of Inflated Expectations | Peak (declining) | Trough of Disillusionment | Trough (late), niche advances | Slope of Enlightenment (early) |

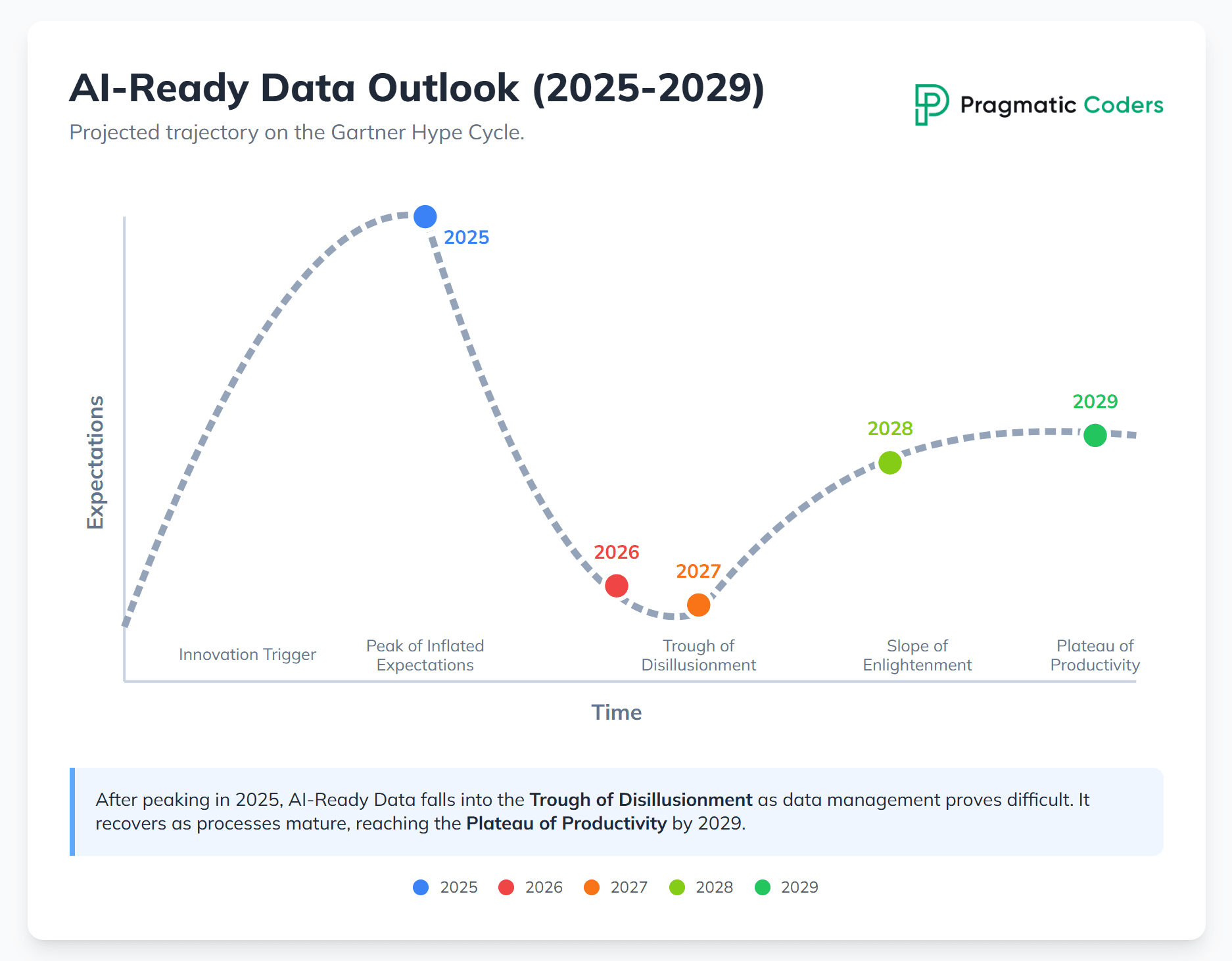

| AI-Ready Data | Peak of Inflated Expectations | Trough of Disillusionment | Trough (bottom), process shift | Slope of Enlightenment | Plateau of Productivity |

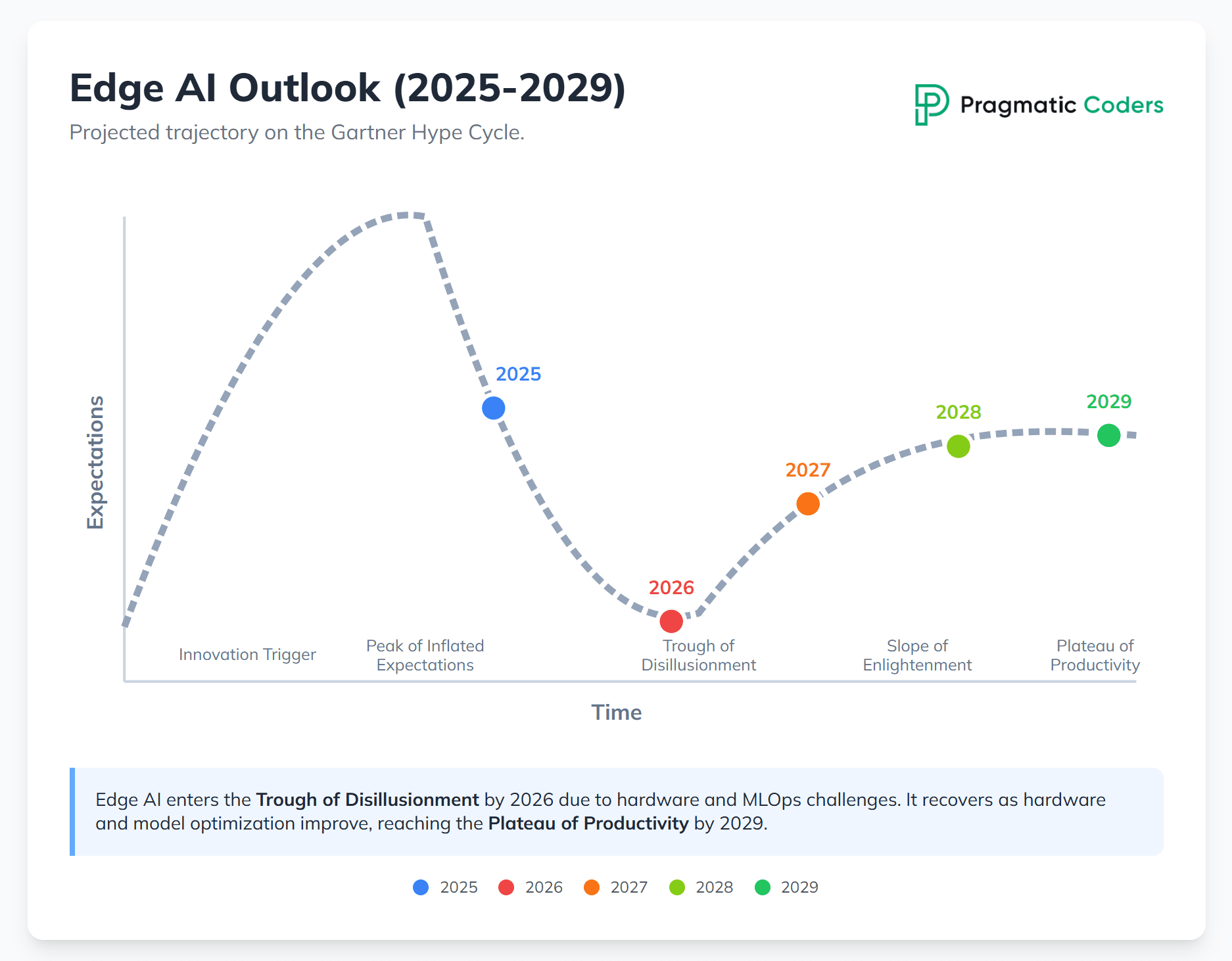

| Edge AI | Trough of Disillusionment | Trough (early) | Slope of Enlightenment (early) | Slope (late), real-time adoption | Plateau of Productivity |

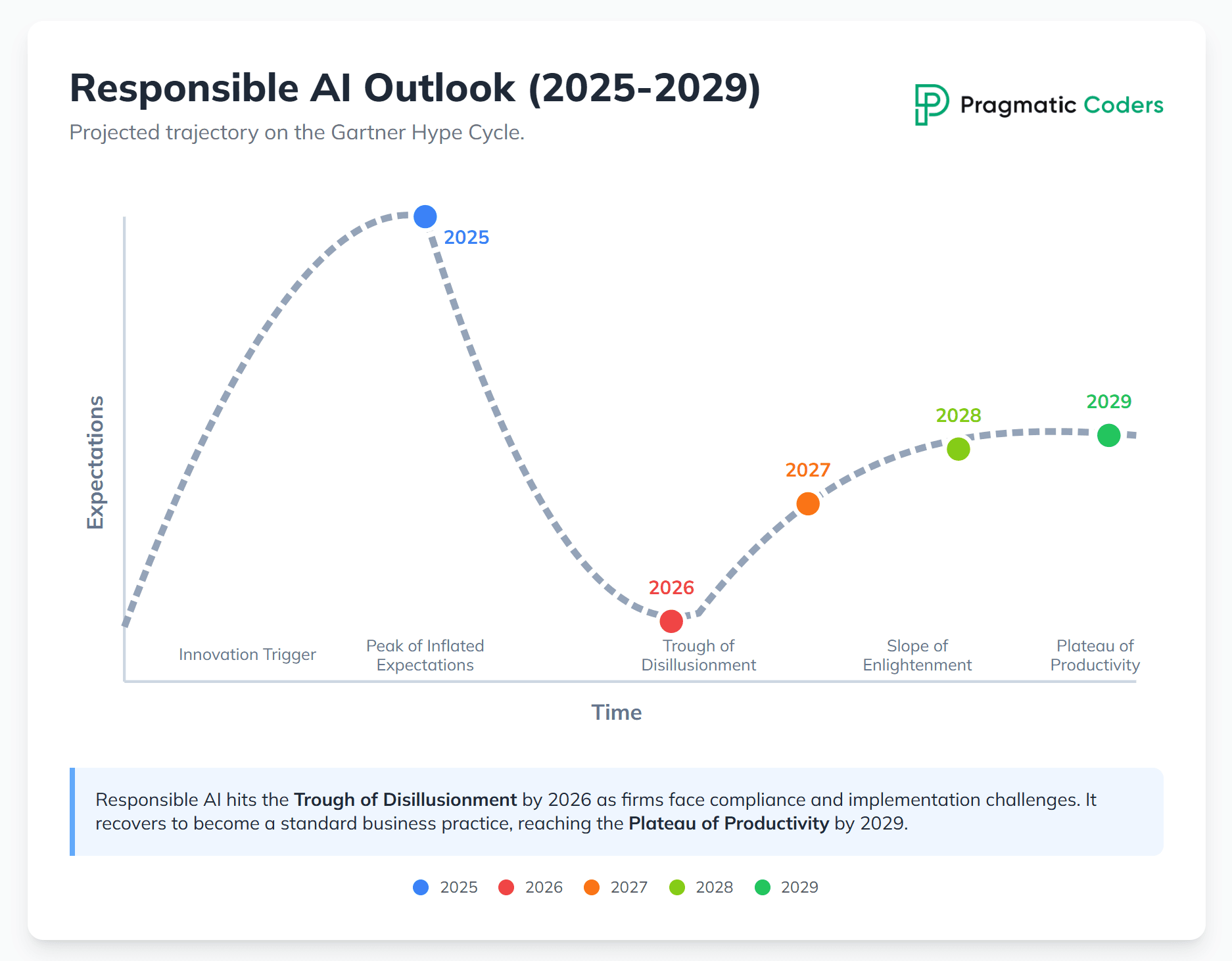

| Responsible AI | Peak of Inflated Expectations | Trough of Disillusionment | Slope of Enlightenment (early) | Slope (mid), audits standardize | Plateau of Productivity |

So, where does this leave us?

Generative AI (GenAI)

- Current stage (2025): Trough of Disillusionment.

- Future outlook: Entering the Trough is a natural, necessary step toward maturity. Over the next few years the spotlight will shift from excitement about potential to solving the real-world blockers that prevent mass adoption. Companies will focus intensively on proving ROI and on addressing governance challenges (hallucinations, bias, and regulations). We can expect less media noise and more pragmatic deployments in narrowly defined, high-value use cases. And once these problems are solved, GenAI will become a deeply embedded, standard enterprise tool. In one of its 2023 press releases, Gartner projected >80% production adoption by 2026. In 2025, we got an additional projection: by 2028, more than 95% of enterprises will have used generative AI APIs or models, and/or deployed GenAI-enabled applications in production environments.

AI Engineering

- Current stage (2025): Peak of Inflated Expectations.

- Future outlook: AI Engineering (building reliable, scalable, and production-ready AI systems by integrating data, models, and infrastructure into real-world workflows) will soon dip into its own Trough of Disillusionment. Organizations will discover that unifying DataOps, MLOps and DevOps pipelines is harder and pricier than it seemed. Yet its strategic importance will only grow; it is viewed as the fundamental fix for GenAI’s growing pains. Expect rising investment in MLOps platforms and process standardization (2027-2028) that ultimately paves the way for systematic, reliable enterprise-wide AI delivery (2029).

Also check: AI coding in software development

Knowledge Graphs

- Current stage (2025): Slope of Enlightenment.

- Future outlook: Knowledge graphs are structured representations of facts and relationships that provide context and support reasoning in AI systems. Right now, they’re steadily climbing toward the Plateau of Productivity. Their value as a trusted context layer for unreliable GenAI models is becoming clearer and clearer. In the near term, they’ll become standard in advanced AI stacks, especially where reliability and explainability matter. They’ll also support emerging approaches like causal and neuro-symbolic AI (both in the Innovation Phase in 2025), so that systems don’t just generate content, but structure and understand it.
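To make the “trusted context layer” idea concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not how any particular product works: the `KnowledgeGraph` class, the invoice facts, and the exact-match check are all made up for this example. A real deployment would more likely use a graph database and a fuzzier claim-matching step.

```python
from typing import NamedTuple


class Triple(NamedTuple):
    subject: str
    predicate: str
    obj: str


class KnowledgeGraph:
    """A tiny in-memory store of (subject, predicate, object) facts."""

    def __init__(self) -> None:
        self._facts: set[Triple] = set()

    def add(self, subject: str, predicate: str, obj: str) -> None:
        self._facts.add(Triple(subject, predicate, obj))

    def supports(self, subject: str, predicate: str, obj: str) -> bool:
        """Return True only if this exact fact is stored in the graph."""
        return Triple(subject, predicate, obj) in self._facts

    def context_for(self, subject: str) -> list[str]:
        """Render stored facts about a subject as lines for a prompt."""
        return sorted(
            f"{t.subject} {t.predicate} {t.obj}"
            for t in self._facts
            if t.subject == subject
        )


# Hypothetical facts, invented for illustration.
kg = KnowledgeGraph()
kg.add("Invoice-42", "issued_by", "Acme Ltd")
kg.add("Invoice-42", "has_status", "paid")

# A claim pulled from a model's answer is accepted only if the graph backs it.
claim_ok = kg.supports("Invoice-42", "has_status", "paid")
claim_bad = kg.supports("Invoice-42", "has_status", "overdue")
```

The same store works in both directions: `context_for` feeds verified facts into a prompt before generation, and `supports` checks a generated claim afterwards.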

AI Agents

- Current stage (2025): Peak of Inflated Expectations.

- Future outlook: As the new hype driver, AI agents will follow the classic curve. After the current wave of crazy enthusiasm and investment, they will inevitably slide into the Trough of Disillusionment within the next 2–3 years. Companies will find that building fully autonomous agents capable of reliably executing complex business tasks is super hard. Challenges will center on security, integration with legacy systems and managing unpredictable behavior. Yet the long-term vision (moving from AI that assists to AI that acts) is so compelling that investment will continue, and agents will ultimately find their place automating ever more complex business processes. The future is flexible, Lego-like AI systems. This architecture lets you plug and play different models (large or small, open-source or proprietary), data sources and tools. It’s a profoundly pragmatic approach: it prevents vendor lock-in, increases agility and lets you use the best tool for each job, which is key to delivering maximum value with minimum budget in record time. Yet it will probably happen only in 2029.
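The “Lego-like” architecture described above can be sketched as a shared interface that every model implements. This is a minimal illustration, not a reference design: `TextModel`, `SmallLocalModel`, `HostedModel`, and `Pipeline` are hypothetical names, and the two model classes return canned strings instead of calling any real model or vendor API.

```python
from typing import Protocol


class TextModel(Protocol):
    """Anything with a complete() method can be plugged in."""

    def complete(self, prompt: str) -> str: ...


class SmallLocalModel:
    # Stand-in for a small open-source model; returns a canned answer.
    def complete(self, prompt: str) -> str:
        return f"[local] {prompt[:20]}"


class HostedModel:
    # Stand-in for a proprietary hosted model; no real API is called.
    def complete(self, prompt: str) -> str:
        return f"[hosted] {prompt[:20]}"


class Pipeline:
    """Routes each task type to whichever model is registered for it."""

    def __init__(self) -> None:
        self._models: dict[str, TextModel] = {}

    def register(self, task: str, model: TextModel) -> None:
        self._models[task] = model

    def run(self, task: str, prompt: str) -> str:
        return self._models[task].complete(prompt)


pipeline = Pipeline()
pipeline.register("classify", SmallLocalModel())
pipeline.register("draft", HostedModel())

# Swapping vendors is a one-line change; callers of run() are untouched.
pipeline.register("draft", SmallLocalModel())
```

Because the rest of the system only depends on `complete()`, replacing a model does not ripple into business logic, which is the lock-in protection the paragraph describes.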

Also check:

- 200+ AI agent stats

- Best tools to build AI agents in 2025

- How to build an AI agent (no coding required)

- AI system vs AI agent vs AI model – which one do you need?

- A list of top 10 AI automation services to help you decide which one your company needs

AI-ready data

- Current stage (2025): Peak of Inflated Expectations.

- Future outlook: Just like AI Engineering, “AI-ready data” (the concept of clean, labeled, and structured data that’s prepared for use in training, validating, or deploying AI models) will soon fall into the Trough. Frustration won’t stem from the idea itself (which is sound) but from the sheer work, cost and organizational complexity required to deliver it. Firms will have to face the hard realities of data quality, governance and integration. Over the next few years the focus will shift from talking about “AI-ready data” to real investment in data engineering, data-management platforms and data-governance processes. Yes, it’s unglamorous, but it’s essential work that will become the top priority for any organization serious about scaling AI.

Edge AI

- Current stage (2025): Trough of Disillusionment.

- Future outlook: Edge AI runs AI models directly on local devices (like phones or sensors), enabling real-time decisions without relying on cloud processing. This once-hot concept will linger in the Trough longer than originally expected, but that doesn’t mean stagnation. Once it gets out of the very bottom of the Trough, we can expect a gradual climb up the Slope of Enlightenment, fueled by the growing need for real-time data processing in manufacturing, logistics and retail, plus evolving partner ecosystems and platforms that make large-scale deployment easier. Nevertheless, we’ll have to wait until 2029 for the Plateau.

EDIT 19.08.2025

Big thanks to Jeff Fryer for proving AI’s deep research options aren’t this good yet 😁 And for showing there’s still this 1% of the Internet that’s alive and people actually read it.

As mentioned in the intro, the future projections described in the article were written based on Kimi K2, Gemini, and ChatGPT’s research and mapped onto Gartner’s hype cycle.

Cool if you agree, but even better… if you don’t. Thanks for challenging the content 🤲

Check Jeff’s insight on Edge AI below:

Responsible AI

- Current stage (2025): Peak of Inflated Expectations.

- Future outlook:

By 2026, Responsible AI enters the Trough of Disillusionment. In 2023–25 organizations rushed to publish ethical principles… but implementation turned out to be more complicated than it seemed: bias fixes break in production, model cards go unread, and audit trails exist only in slide decks. And regulators don’t accept good intentions alone: fines under laws like the EU AI Act start to land. Ethics committees are questioned, budgets frozen, and “Responsible AI” begins to feel like an expensive checkbox rather than a strategic advantage.

Yet, between 2027 and 2028, the idea of Responsible AI might start climbing the Slope of Enlightenment. In the earliest scenarios, AI Governance Platforms will start reaching the Plateau and offer plug-and-play bias dashboards, automated model cards in CI/CD, and standardized “Responsible AI” squads. Also, universities will probably embed ethics in AI curricula.

By 2029, the shift is complete. Responsible AI moves from theory to practice. Every model must pass fairness, privacy, and explainability checks before release.

- What to do now: Start building the muscle: ensure ownership, fairness and explainability in ML pipelines, and treat Responsible AI as product quality (not just compliance). It’s better to be safe now than sorry later.
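As a rough illustration of “treating Responsible AI as product quality”, a fairness check can run as an automated release gate alongside ordinary tests. The sketch below uses demographic parity (the gap in positive-prediction rates between groups) as one possible metric. The function names, the sample predictions, and the 0.1 threshold are assumptions made for this example, not a regulatory standard.

```python
def positive_rate(predictions: list[int]) -> float:
    """Share of positive (1) predictions in a group."""
    return sum(predictions) / len(predictions)


def demographic_parity_gap(by_group: dict[str, list[int]]) -> float:
    """Largest difference in positive-prediction rate between any two groups."""
    rates = [positive_rate(preds) for preds in by_group.values()]
    return max(rates) - min(rates)


def release_gate(by_group: dict[str, list[int]], max_gap: float = 0.1) -> bool:
    """Return True if the model may ship; the 0.1 default is an assumed value."""
    return demographic_parity_gap(by_group) <= max_gap


# Made-up predictions (1 = approved) for two hypothetical groups.
predictions = {
    "group_a": [1, 1, 0, 1, 0, 1, 1, 0],  # 5 of 8 approved
    "group_b": [1, 0, 0, 1, 0, 0, 1, 0],  # 3 of 8 approved
}

gap = demographic_parity_gap(predictions)
can_ship = release_gate(predictions)  # False here: the gap exceeds 0.1
```

In a CI job, a failing `release_gate` would block the deployment the same way a failing unit test does. Which metric and threshold are appropriate depends on the use case and should be decided with legal and domain experts.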


What does it mean to you?

Understanding these trends is one thing; acting on them is what matters. As your product partner, here’s a piece of advice:

Gartner reflects the market’s emotions. The winners won’t be those hyping AI agents (check: Bandwagon effect), but those who invest now in data infrastructure, AI engineering, and responsible frameworks.

We’ve mapped the Gartner curve; now it’s time to decide what you’ll actually do with it:

- You’re probably being sold the wrong thing first

Every vendor is pitching “AI Agents” or “AI-ready data lakes” right now. Start with a use-case that already works (e.g., a process automation enhanced with AI).

- Knowledge Graphs = cheap insurance

If you have any compliance, audit or “explain-this-to-the-board” requirement, we bolt a lightweight knowledge graph onto your existing database in a couple of sprints. It’s not sexy, but it de-risks hallucinations and cuts future re-work.

- AI Engineering is the bill you’ll pay anyway

Whether you pick GenAI or Agents, you’ll still need CI/CD for models, drift detection and rollback. We build that pipeline once, so the next model swap is a one-day job instead of a six-week fire-drill.

- Responsible AI = faster go-live

Instead of treating “AI TRiSM” as a separate line item, we embed guardrails directly into the product: red-team prompts, audit logs and bias tests ship with v1. Result: regulators sign off faster, your legal team sleeps at night, and you still hit the market before the competition.

- Edge AI is still a waiting game

Unless you’re running robots in a warehouse, park Edge AI for 2027.
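For readers wondering what the “drift detection” in the AI Engineering point involves, here is a small Python sketch using the Population Stability Index (PSI), one common way to compare live input data against a training-time baseline. The bin count, the sample values, and the 0.25 alert threshold (a widely quoted rule of thumb) are assumptions for illustration, not a description of any specific pipeline.

```python
import math


def psi(expected: list[float], actual: list[float], bins: int = 4) -> float:
    """Population Stability Index between a baseline and a live sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins

    def shares(values: list[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width) if width else 0
            # Clamp so values outside the baseline range land in the edge bins.
            counts[min(max(idx, 0), bins - 1)] += 1
        # Small floor avoids log(0) for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Made-up feature values: training-time baseline vs. shifted live traffic.
baseline = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8]
live = [0.7, 0.8, 0.8, 0.9, 0.9, 0.9, 1.0, 1.0]

DRIFT_THRESHOLD = 0.25  # assumed rule-of-thumb cutoff
drifted = psi(baseline, live) > DRIFT_THRESHOLD
```

A scheduled job could compute this per feature and trigger an alert, a retraining run, or a rollback to the previous model version when the threshold is crossed.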

If you’d like a second opinion on your GenAI roadmap – or hands to build it – reach out. Our cross-functional squads (product managers + engineers + UX) will help you decide which AI solution is right for you, and then – we’ll just build it. Send us a message or schedule a call now.

FAQ

Why did you pick exactly these seven AI technologies?

They are the only AI topics mentioned in Gartner’s official 2025 press releases and analyst posts, and they map directly to the real-world decisions our clients face today.

Which technologies are the safest bets for 2026?

Knowledge Graphs, because they are already climbing the Slope of Enlightenment and solve unavoidable scaling pains.

How soon will Edge AI be worth investing in?

Not before 2027-28. Hardware costs and fragmented standards keep it in the Trough; we recommend monitoring chip prices and 5G/6G roll-outs, then piloting only if latency is mission-critical.

What does 'Trough of Disillusionment' mean for my CFO?

Vendors will oversell, pilots will fail, and budgets will get cut, unless you scope a narrow, measurable use-case first. We start with a fixed-price sprint to prove ROI before scaling.

Is Responsible AI just red tape, or does it accelerate delivery?

When built in from day one (audit logs, bias tests, guardrails), it shortens regulatory sign-off and removes legal blockers, letting you ship sooner.

How fast can Pragmatic Coders move from roadmap to working code?

After a 30-minute discovery call we scope a two-week sprint with a fixed price; you get a running, observable feature—not another slide deck.