From UX to AX: Designing for AI Agents—and Why It Matters

Designing websites, cloud applications, or native and web apps for AI agents (systems that autonomously perform tasks with minimal human intervention) requires a paradigm shift from traditional user-centered design to agent-centered design. It marks a fundamental change in how we think about designing digital products.

In the early days, interface design focused heavily on function and task execution. The roles of UX/UI or product designer didn’t even exist; what mattered was the code that allowed tasks to be completed. As computers and phones became more widely accessible, it became clear that user experience was an indispensable part of software development. Today, in the era of the AI revolution and the growing development of AI agents that perform tasks on behalf of humans, digital product design is shifting from human-centered to AI-centered.


Web design has come full circle

As Professor Czesława Frejlich noted, interface design, like technology itself, evolves over time: design changes because needs change, and so does technology. At the beginning of the computer era, user interfaces were almost entirely text-based: command lines, logs, configuration files, and detailed output filled with contextual information. These interfaces were rich in text but created mainly for technical users.

Apple IIe computer from 1983 with a command-line interface

When computers became cheaper and more accessible, design shifted toward graphical user interfaces (GUI). Visual elements, icons, minimal text, animations, and a high level of user interaction became the foundation of how we design digital interfaces today. The goal became simplicity, usability, ease of use, and accessibility – often at the expense of detailed information.

Modern Apple computer running macOS Monterey

Humans prefer visual communication. AI prefers text. Future interfaces will be text-based.

For thousands of years, the human brain has processed visual information faster than text (before writing existed, we communicated through gestures, facial expressions, and drawings). Our brains can recognize an image within milliseconds, and visual cues in user interfaces, such as icons, data graphs, or green and red buttons, are what we process fastest.

AI agents don’t “see” and lack the human ability to interpret the world visually. Traditional language models were trained primarily on text, and although the newest AI systems increasingly integrate text and image processing, everything a model handles is ultimately reduced to numbers. In practice, an agent often has to “translate” an image into a textual description before it can “think” about it. Even when AI “sees” a red button, it is actually processing pixel values and producing the text: “this is a red button.” AI communicates primarily through text, and future graphical interfaces will be built on vast amounts of text and numerical data.

Dual-channel interface design: for humans and AI agents

The internet was built exclusively for human eyes and minds. Interfaces today are designed to be seen, clicked, visually interpreted, read, and to evoke emotions. We still design interfaces across multiple screen sizes like mobile, tablet, and desktop, striving for visual consistency and clear information hierarchy (although many popular digital products still fall short – but that’s a topic for another article).

Now that AI agents are gaining the ability to “navigate” the web, design can no longer be reserved for humans alone; it must also accommodate AI agents. The interfaces of the future will need to work in symbiosis with both humans and AI agents.

Interfaces of the future for AI

Current interface design

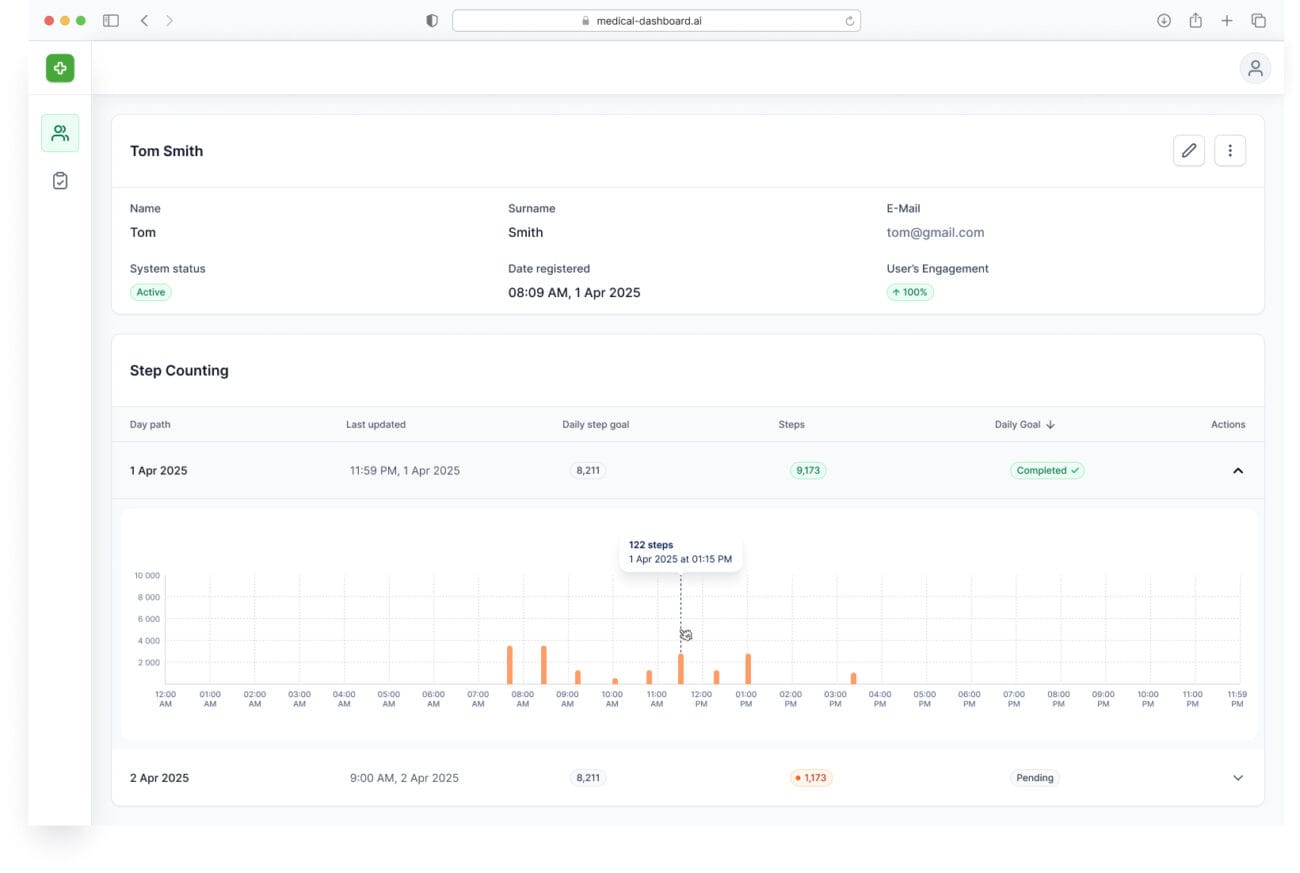

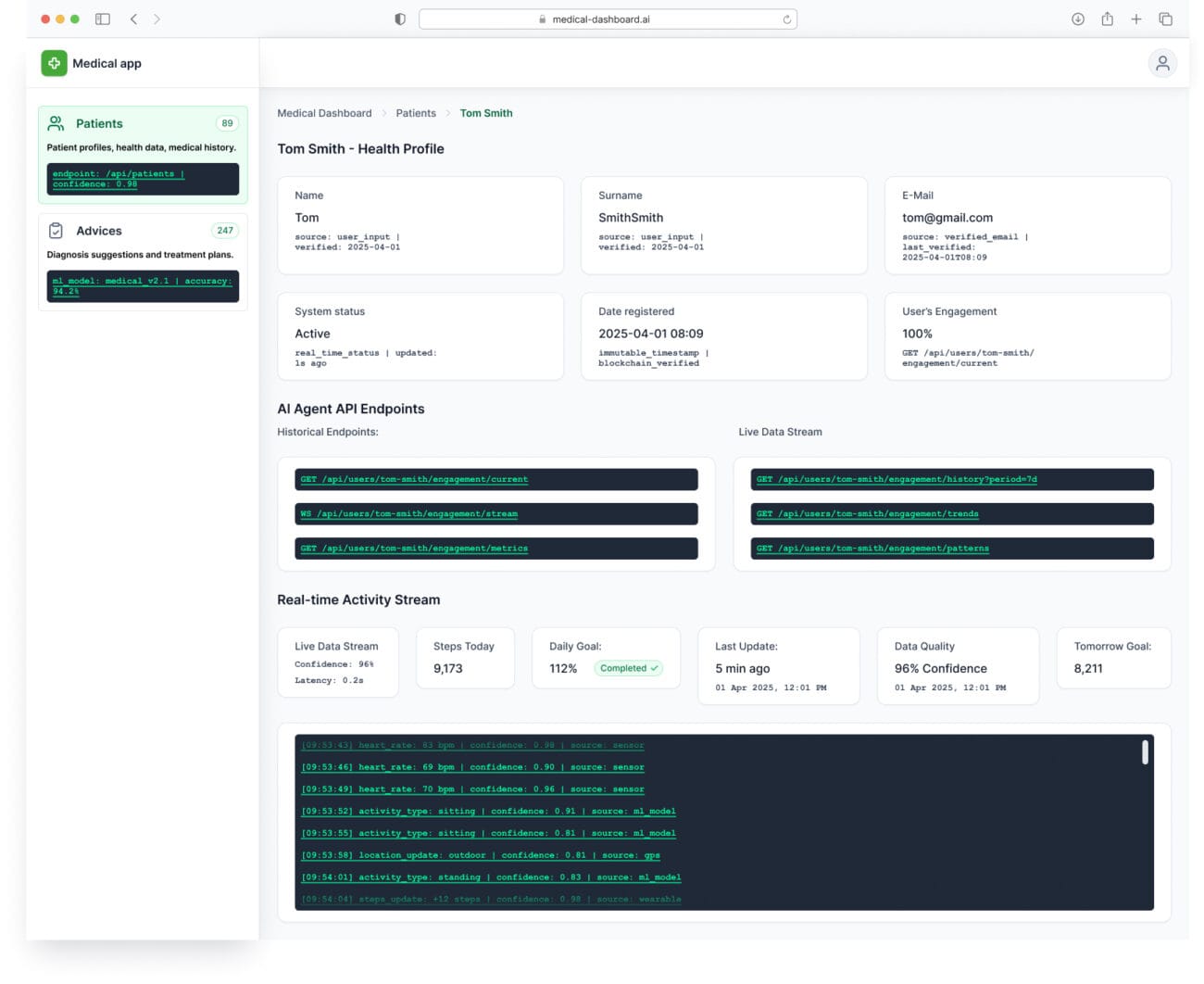

Let’s examine an application we worked on at Pragmatic Coders. (For the purposes of this article, some features have been omitted and data anonymized.) It’s a medical application for doctors, who – thanks to AI – can automatically send treatment recommendations to their patients and manage treatment plans. Every element of the native app was designed for human users, who manually analyze health data using charts and make decisions based on the visualized information. Some metadata related to health data is hidden to avoid distracting the user.

As you can see, the user interface is quite tabular. There is a section with user metadata and nested data tables containing health information. Additionally, the doctor can quickly analyze step count data using bar charts.

Interface for the AI Agent

An interface for an AI agent will require more contextual data. Additionally, we must remember that modern LLMs (Large Language Models) or LRMs (Large Reasoning Models) do not handle quantitative, structured data – such as spreadsheets, tables, or charts – very well. Anyone who has tried uploading large Excel files to popular AI chat tools knows that the responses are often unusable and frequently inaccurate. LLMs and LRMs struggle with complex numerical relationships.

For example:

- A page in a medical application marked up with JSON-LD allows the AI to recognize an object (e.g., step-count metadata) and its values over time (e.g., March 23, 2025, 12:00 PM, step count: 12).

- However, if step count trends (or any other trends such as product sales or stock price increases) are only available in an Excel file, the LLM may struggle – unless the data is transformed into natural language summaries, e.g., “Step count increased by 10% in Q1.”
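The two bullets above describe two channels for the same data. A minimal Python sketch of both follows, assuming a hypothetical `StepReading` model: `to_jsonld` emits a JSON-LD block an agent can parse, and `summarize_change` turns two aggregate values into a sentence like the one quoted. The `https://example.com/vocab/` context and all type and property names are placeholders, not schema.org terms; a production page would map them to an established vocabulary.

```python
import json
from dataclasses import dataclass
from datetime import datetime


@dataclass
class StepReading:
    """One step-count measurement (hypothetical data model)."""
    timestamp: datetime
    steps: int


def to_jsonld(patient_id: str, readings: list[StepReading]) -> str:
    """Machine channel: readings serialized as a JSON-LD <script> block.

    The @vocab URL and every type/property name are placeholders.
    """
    doc = {
        "@context": {"@vocab": "https://example.com/vocab/"},
        "@type": "StepCountSeries",
        "patient": patient_id,
        "readings": [
            {"@type": "StepCountReading",
             "timestamp": r.timestamp.isoformat(),
             "stepCount": r.steps}
            for r in readings
        ],
    }
    # Escape "</" so no value can close the <script> element early ("\/" is valid JSON).
    payload = json.dumps(doc).replace("</", "<\\/")
    return f'<script type="application/ld+json">{payload}</script>'


def summarize_change(metric: str, before: float, after: float, period: str) -> str:
    """Text channel: one plain-language sentence describing a trend."""
    if before == 0:
        return f"{metric} has no baseline for comparison in {period}."
    pct = round((after - before) / before * 100)
    if pct == 0:
        return f"{metric} was unchanged in {period}."
    direction = "increased" if pct > 0 else "decreased"
    return f"{metric} {direction} by {abs(pct)}% in {period}."


if __name__ == "__main__":
    print(to_jsonld("patient-001", [StepReading(datetime(2025, 3, 23, 12, 0), 12)]))
    print(summarize_change("Step count", 1000, 1100, "Q1"))  # Step count increased by 10% in Q1.
```

Publishing both on the same page lets an agent read the exact figures while still getting the trend as a sentence, without having to compute it from a spreadsheet.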

Differences in UI for AI Agents and Humans

Today, we must begin designing two versions of each interface: one for humans and one for AI agents. It’s no longer enough to provide dark mode or responsive layouts (desktop, tablet, mobile). A simplified, text-based version for AI agents is becoming essential, one that also supports human oversight and navigation.

Human + AI Agent = Different Cognitive Needs

- Humans need visual hierarchy, intuitive navigation, and emotional context.

- AI agents require structured data, clear API endpoints, and predictable formats with continuously updated metadata.
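At the code level, “two versions of an interface” can be as simple as serving one record in two formats and letting the client choose through the HTTP Accept header (content negotiation). The framework-free Python sketch below is hypothetical: `wants_json` and `render` are illustrative names, and the header parsing deliberately ignores quality values (`q=`), which a real server should honor.

```python
import json
from html import escape


def wants_json(accept_header: str) -> bool:
    """Simplified Accept check: does the client list a JSON media type?"""
    media_types = [part.split(";")[0].strip().lower() for part in accept_header.split(",")]
    return "application/json" in media_types or "application/ld+json" in media_types


def render(record: dict, accept_header: str) -> tuple[str, str]:
    """Return (content_type, body) for the same record in the requested form."""
    if wants_json(accept_header):
        # Agent channel: structured, predictable, including freshness metadata.
        return "application/json", json.dumps(record, sort_keys=True)
    # Human channel: a deliberately tiny visual rendering (an HTML table).
    rows = "".join(
        f"<tr><th>{escape(str(key))}</th><td>{escape(str(value))}</td></tr>"
        for key, value in record.items()
    )
    return "text/html", f"<table>{rows}</table>"


if __name__ == "__main__":
    record = {"metric": "steps", "value": 8421, "last_updated": "2025-03-23T12:00:00Z"}
    print(render(record, "application/json"))
    print(render(record, "text/html,application/xhtml+xml;q=0.9"))
```

Both responses come from the same source record, so the human view and the agent view cannot drift apart.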

Performance Optimization

- Interfaces for humans can be visually rich – but slower.

- AI requires fast, minimalist communication protocols.

AX (Agent Experience) design will transform how we build digital products, shifting from a “user-first” to an “agent-first” mindset in which designing for AI agents becomes just as important as UX for humans. This new paradigm will also change how people interact with interfaces: their role will shift from completing tasks directly within a system to collaborating with AI agents.

As a result, designers will need to focus on the experience of working with AI agents, not just traditional user interactions.

A Revolutionary Shift in UX and UI Design for Human Applications

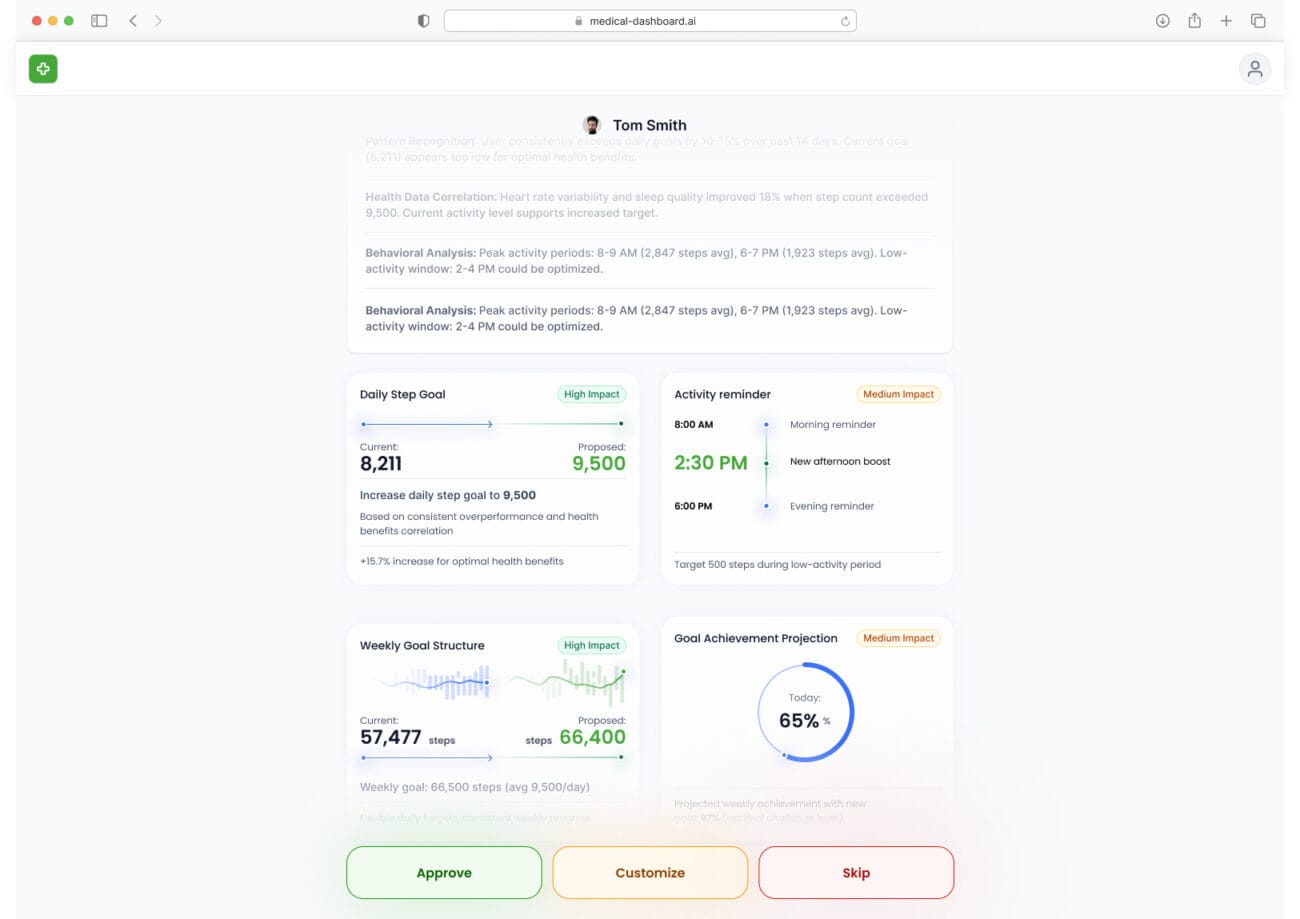

Currently, the primary role of UX and UI designers is to help users make decisions. In the age of AI agents, the user will no longer be the sole executor of tasks; they will become a supervisor of decision-making processes carried out by the agent. Today, users verify data themselves and make decisions along paths designed by UX professionals. In the future, the user experience will focus primarily on supervising and verifying the actions of the AI agent.

- Current process of human interaction with interfaces:

  - Human as executor, human as decision-maker

- Future process:

  - AI as executor, human as decision-maker

In practice, the agent executes and the user oversees. For example:

- The agent logs into the CMS of a health application and creates a draft medical recommendation based on detailed data.

- The agent selects images and health metadata from a library and generates alternative recommendations.

- The agent prepares the medical recommendation.

- The agent waits.

  - For the user.

  - For the decision.

- The human makes the decision.
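The workflow above behaves like a small state machine: the agent may draft and submit, but only a human can move a draft into its final state. The Python sketch below is a hypothetical model of that rule, not code from the application described here; the `Recommendation` class, its states, and its method names are assumptions.

```python
from dataclasses import dataclass, field
from enum import Enum


class Status(Enum):
    DRAFTING = "drafting"
    AWAITING_REVIEW = "awaiting_review"
    APPROVED = "approved"
    REJECTED = "rejected"


@dataclass
class Recommendation:
    """A hypothetical agent-produced draft that only a human can finalize."""
    patient_id: str
    text: str = ""
    alternatives: list[str] = field(default_factory=list)
    status: Status = Status.DRAFTING
    reviewer_note: str = ""

    def submit_for_review(self) -> None:
        # The agent's last step: it stops and waits for the human.
        if self.status is not Status.DRAFTING:
            raise ValueError(f"cannot submit from {self.status.value}")
        if not self.text:
            raise ValueError("nothing to review")
        self.status = Status.AWAITING_REVIEW

    def approve(self, note: str = "") -> None:
        self._decide(Status.APPROVED, note)

    def reject(self, note: str) -> None:
        self._decide(Status.REJECTED, note)

    def _decide(self, outcome: Status, note: str) -> None:
        # Only a draft that is waiting for review can be decided on.
        if self.status is not Status.AWAITING_REVIEW:
            raise ValueError(f"cannot decide from {self.status.value}")
        self.status = outcome
        self.reviewer_note = note
```

Making approve and reject the only exits from AWAITING_REVIEW is what keeps the human as the decision-maker: the agent has no code path to publish on its own.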

It is still the human who approves publication, evaluates the input data, and, most importantly, verifies the agent’s reasoning: what data was used, what assumptions were made, and what strategy was planned. They will also need to assess whether the LLM or LRM may have been trained on contaminated or flawed data. The user journey within the system will be drastically shortened, and the number of required actions (e.g., clicks) will be minimized and focused solely on confirming the AI agent’s work.

The Future of Interfaces and the Role of the UX Designer

The role of the UX designer will shift from designing for “user-app interaction” to designing for “user-agent collaboration.” We believe that the user, traditionally the central figure for whom systems are designed, will become less central as design increasingly targets the AI agent’s performance. However, the user will remain essential for verifying critical AI actions.

Designers will be responsible for:

- Creating interfaces that allow AI agents to operate efficiently

- Designing interfaces that support clear and transparent human-agent collaboration

- Building user-facing interfaces that give users a greater sense of control over the AI agent’s work

- Developing tools and solutions that help users feel more confident in the AI agent’s decisions

Future interfaces that humans interact with will largely revolve around just two core actions:

- Approve the AI agent’s work

- Reject the AI agent’s work

Within these two actions, designers must ensure users can easily access and understand the AI agent’s reasoning process. The human will be reduced to the role of a decision-maker, not a task executor.

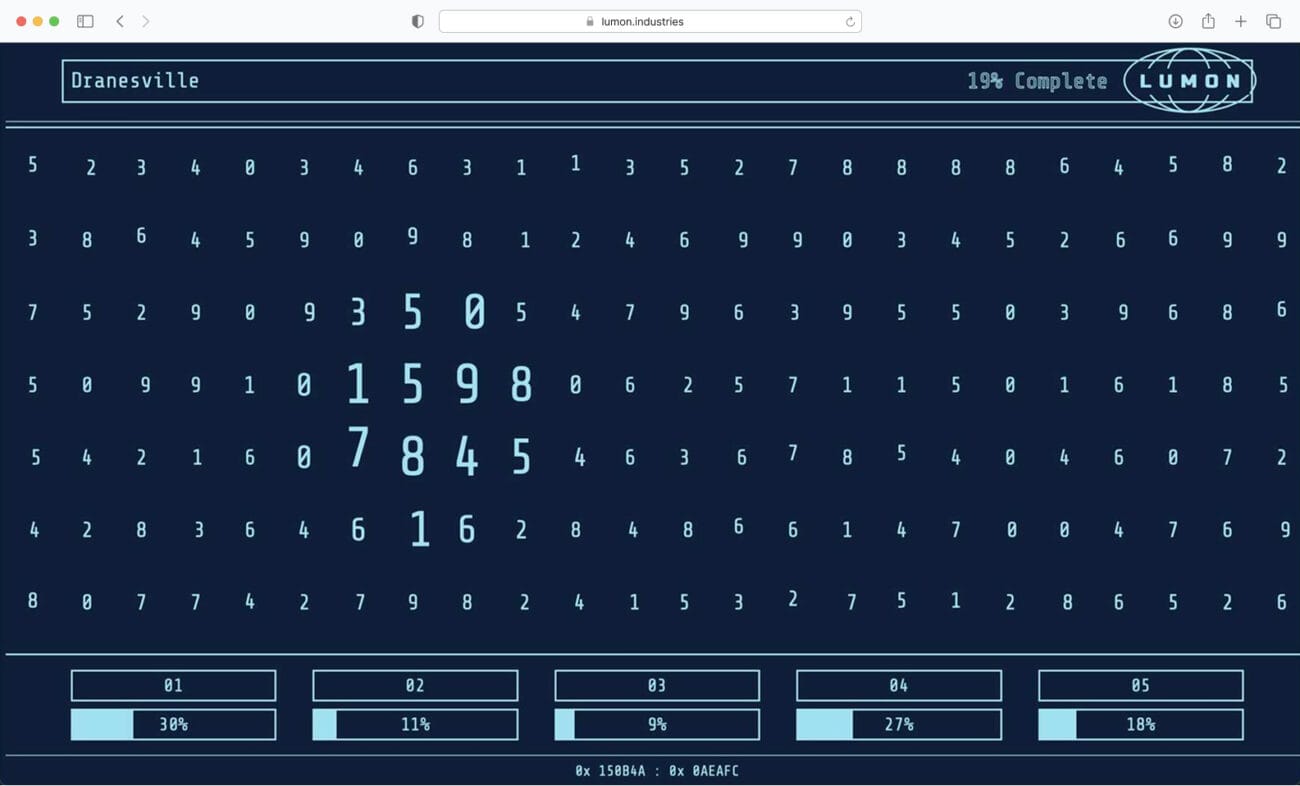

Future UX/UI Will Be the Exact Opposite of How Mark S. Works in Severance

In the Apple TV series Severance, the protagonist Mark S. works with a computer interface that is mysterious, minimalist, severely limited in functionality, offers minimal visual data, and lacks context or explanatory information. The interface is intentionally incomprehensible – the user doesn’t know why they’re doing something, only what they are told to do. It’s an “interface as a cage” – designed to control the user, not to support their understanding.

Sound familiar? That’s exactly what many of today’s interfaces look like. Here are some examples:

- Excel – Limited visual representation of data. A cage without additional context.

- Duolingo – Designed to control the user rather than support their understanding or thought process. Lacks context and explanatory information.

- Salesforce – An application as a cage. The user doesn’t know why they are doing something in the system, only what they are supposed to do.

- Modern CMS platforms – Minimalist and extremely limited in functionality. If you want any additional features, you have to pay for or license other software.

Future interfaces, in contrast, will be designed to empower the user, especially as a verifier of AI behavior, not to obscure or restrict their understanding.

What will future human interfaces look like?

The future of human interfaces will focus on supporting human decision-making based on tasks performed by AI agents. AI will act as the executor, while the human will serve as the decision-maker. The interface for humans will need to fulfill two main goals:

- Serve as a decision-making interface – The interface will present data in a clear and visual form (since humans prefer visual communication over text). Data will be displayed in a minimalist way, and the actions the user must take will be limited to approving or rejecting the AI agent’s work.

- Serve as a supervision interface – The interface will include the full history of the AI’s actions, its reasoning process, the data it used to make decisions, its simulations, and the ability to trace or reverse steps in its decision-making.
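A supervision interface needs a data model behind it: each agent action stored with the data it used and its stated reasoning, plus enough state to reverse it. The Python sketch below shows one hypothetical structure; `SupervisionLog`, `AgentStep`, and their fields are illustrative assumptions, and the snapshots are shallow copies, which only suits flat state.

```python
from dataclasses import dataclass, field
from typing import Any


@dataclass
class AgentStep:
    """One agent action plus the context a human reviewer needs."""
    action: str
    sources: list[str]        # data the agent relied on
    rationale: str            # the agent's stated reasoning
    before: dict[str, Any]    # state snapshot taken before the step


@dataclass
class SupervisionLog:
    """History of agent steps that can be traced and rolled back."""
    state: dict[str, Any] = field(default_factory=dict)
    steps: list[AgentStep] = field(default_factory=list)

    def record(self, action: str, sources: list[str], rationale: str,
               changes: dict[str, Any]) -> None:
        # Shallow snapshot: adequate for flat state, not for nested objects.
        self.steps.append(AgentStep(action, sources, rationale, dict(self.state)))
        self.state.update(changes)

    def trace(self) -> list[str]:
        # Human-readable history: what was done, from which data, and why.
        return [
            f"{i}. {s.action} (sources: {', '.join(s.sources)}) - {s.rationale}"
            for i, s in enumerate(self.steps, start=1)
        ]

    def revert_to(self, kept_steps: int) -> None:
        """Undo everything after the first `kept_steps` steps (0 undoes all)."""
        if not 0 <= kept_steps <= len(self.steps):
            raise ValueError("kept_steps out of range")
        if kept_steps < len(self.steps):
            self.state = dict(self.steps[kept_steps].before)
            del self.steps[kept_steps:]
```

The trace covers the “full history and reasoning” goal, and revert_to covers the ability to reverse steps; a real system would persist the log rather than keep it in memory.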

We believe that the future interface, much like Mark S.’s computer in Severance, will be visually and structurally minimalist and procedural (approve or reject), with AI performing most of the work. Conceptually, however, it will be radically different – deeply grounding the user in additional context, data, meaning, and visuals. The future of interfaces won’t be about hiding parts of the system or locking the user into rigidly designed paths. It will be about explaining the AI agent’s work, supervising it, collaborating with it, and ultimately approving, editing, or rejecting the work it has performed on our behalf.

Accessibility Principles Have Never Been More Important

In the future, interfaces will no longer be designed solely for humans, but also for AI agents. AI agents will execute our work, while our role will be limited to making decisions and verifying the work performed by a “being” that cannot see, hear, or feel. Accessibility for such entities will become crucial.

Imagine a world where everyone has some form of limitation—physical, sensory, or cognitive—that makes it difficult or even impossible to use the internet effectively. Some people are visually impaired (blind), others are unable to move (e.g., fully paralyzed), and still others have hearing difficulties (deaf).

Accessibility is not a luxury or an add-on. It is a foundation. A website or application should exclude no one – every user should have equal access to content and functionality.

To better illustrate this, consider AI agents. In many ways, they are like digital beings who, like some people with disabilities, cannot see, hear, or move. They require full structural and semantic support to understand content and context.

That’s why, in the future, accessibility will be critical not just for humans but also for AI agents. It will enable them to navigate applications effectively, understand the intent behind various features, and operate within an increasingly automated world.

Use Descriptive URLs

Screen readers often read URLs (e.g., in links or footers). A descriptive URL helps users – and AI agents – better understand where the link leads.

Example:

❌ /prod?id=61i122xs

This says nothing to the user or the AI agent. The agent only sees a random parameter and doesn’t know what the page is about. It must take extra steps—following the link, rendering the page, analyzing the content. This consumes computational power and slows down the analysis process.

✅ /products/lighting/for-kids/smart-lighting/wireless-motion-sensor

This immediately communicates the category, the target audience, and the specific product—not only for humans but also for AI agents. The agent instantly knows what product this is, can fetch specifications or similar items, and significantly speeds up the analysis.

Use More Detailed ARIA Labels

ARIA (Accessible Rich Internet Applications) labels are essential not only for people with disabilities but also for AI agents analyzing and interpreting user interfaces. These labels provide additional context for both humans and AI.

Example:

❌

<button><i class="icon-1245"></i></button>

✅

<button aria-label="Add product to cart">
  <i class="icon-cart"></i>
</button>

The aria-label describes the button’s function for both screen readers and AI agents, helping them understand this is for adding a product to the cart.
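Missing labels like the one in the first example can be caught automatically. The Python sketch below uses the standard library's `html.parser` to count buttons that have neither visible text nor an `aria-label`/`aria-labelledby` attribute. It is a simplified heuristic, not the full W3C accessible-name computation (which also considers `title`, nested image `alt` text, and associated labels), and the class and function names are hypothetical.

```python
from html.parser import HTMLParser


class UnlabeledButtonFinder(HTMLParser):
    """Counts <button> elements with no visible text and no ARIA label."""

    def __init__(self) -> None:
        super().__init__()
        self.unlabeled = 0
        self._in_button = False
        self._has_name = False

    def handle_starttag(self, tag, attrs):
        if tag == "button":
            attributes = dict(attrs)
            self._in_button = True
            # aria-label or aria-labelledby supplies a name even without text.
            self._has_name = bool(
                (attributes.get("aria-label") or "").strip()
                or attributes.get("aria-labelledby")
            )

    def handle_data(self, data):
        if self._in_button and data.strip():
            self._has_name = True  # visible text also names the button

    def handle_endtag(self, tag):
        if tag == "button" and self._in_button:
            self._in_button = False
            if not self._has_name:
                self.unlabeled += 1


def count_unlabeled_buttons(html: str) -> int:
    finder = UnlabeledButtonFinder()
    finder.feed(html)
    finder.close()
    return finder.unlabeled
```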

Logical Heading Hierarchy (H1, H2, H3) Is a Must

A logical heading structure (H1 → H2 → H3…) is mandatory—not only for accessibility, where screen reader users rely on it to understand the importance and order of content—but also for AI agents. Thanks to structured headings, AI agents and LLMs can:

- Understand the main topics and subtopics – H1 as the page title

- Assess importance and relationships between sections – H2 as a subtitle

- Navigate the document effectively – LLMs scan for keywords and define concise topic summaries

Without a logical heading structure, AI agents see a chaotic block of text that is harder to interpret.

❌

<h4>Technical Specifications</h4>
<p>Automatic upper arm blood pressure monitor…</p>
<h1>Omron X3 Comfort</h1>
<h3>User Manual</h3>
<p>Place the cuff above the elbow…</p>
<h2>Clinical Data</h2>
<p>CE Certificate, ESH validation…</p>

✅

<h1>Samsung Health Monitor Blood Pressure Device</h1>
<h2>Technical Specifications</h2>
<p>Automatic upper arm monitor with irregular heartbeat detection…</p>
<h2>Clinical Data</h2>
<p>CE Certificate, validated by ESH protocol…</p>
<h2>User Manual</h2>
<h3>Fitting the Cuff</h3>
<p>Place the cuff 2–3 cm above the elbow…</p>
<h3>Starting the Measurement</h3>
<p>Press START/STOP and keep your arm still…</p>

A logical heading structure clearly defines the page’s main topic and organizes content into main sections. AI agents can easily generate page summaries or answer detailed queries based on this structure.
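Heading order is easy to check mechanically. This Python sketch (hypothetical names, standard-library `html.parser`) collects heading levels and flags the problems visible in the incorrect example: a page that doesn't start at H1 and a jump from H1 straight to H3. Requiring exactly one H1 is a common convention rather than a rule of the HTML specification, so treat that check as an adjustable assumption.

```python
from html.parser import HTMLParser


class HeadingCollector(HTMLParser):
    """Collects heading levels (1-6) in document order."""

    def __init__(self) -> None:
        super().__init__()
        self.levels: list[int] = []

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag names, so "H2" arrives as "h2".
        if len(tag) == 2 and tag[0] == "h" and tag[1] in "123456":
            self.levels.append(int(tag[1]))


def heading_problems(html: str) -> list[str]:
    """Flag a missing/duplicate h1, a page not starting at h1, and skipped levels."""
    collector = HeadingCollector()
    collector.feed(html)
    collector.close()
    levels = collector.levels
    problems = []
    if levels.count(1) != 1:
        problems.append(f"expected exactly one h1, found {levels.count(1)}")
    if levels and levels[0] != 1:
        problems.append(f"first heading is h{levels[0]}, not h1")
    for prev, cur in zip(levels, levels[1:]):
        # Going deeper by more than one level (h1 -> h3) skips a level;
        # moving back up (h3 -> h2) is fine.
        if cur > prev + 1:
            problems.append(f"h{prev} is followed by h{cur} (skipped level)")
    return problems
```

Run on the ❌ markup above, it reports that the first heading is h4 and that h1 is followed by h3; the ✅ markup produces no problems.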

Add Alternative Text (alt-text) for Images

LLMs analyzing a webpage don’t always have advanced image recognition (like OCR or computer vision). If you want an AI agent to understand that an icon represents a “cart” or “add to favorites,” you must describe the image using alt text.

Suppose you want an AI agent, such as OpenAI Operator, Proxy by Convergence, or China’s Manus, to be able to reserve a window table away from the kitchen or book a cabin on a forested hill through your site. In that case, you’ll need descriptive alt text for your images. AI agents don’t “see” images the way humans do; they have no eyes.

❌ <img src="table_5.png">

✅ <img src="table_5.png" alt="Table number 5 by the window, away from the kitchen – perfect for an intimate dinner.">

A detailed description, including number, location, and intended use, is useful not only for screen readers but also for AI agents automating bookings.
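When a booking system already stores structured facts about each table, alt text like the example above can be generated rather than written by hand, which keeps it consistent across many images. A minimal Python sketch, assuming a hypothetical `Table` record; the field names and the sentence template are illustrative.

```python
from dataclasses import dataclass
from html import escape


@dataclass
class Table:
    """Hypothetical booking-system record for one restaurant table."""
    number: int
    location: str        # e.g. "by the window"
    distance_note: str   # e.g. "away from the kitchen"
    best_for: str        # e.g. "an intimate dinner"
    image_file: str


def alt_text(table: Table) -> str:
    # Lead with the facts an agent filters on: number, location, intended use.
    return (f"Table number {table.number} {table.location}, "
            f"{table.distance_note}, suited to {table.best_for}.")


def img_tag(table: Table) -> str:
    # escape() also escapes quotes by default, so text cannot break the attribute.
    return f'<img src="{escape(table.image_file)}" alt="{escape(alt_text(table))}">'
```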

Agents could recognize this as “extended semantic metadata,” giving them richer context. Such enhanced alt text might become a dedicated communication channel just for agents – perhaps alt text will be written not for humans at all, but exclusively for AI.

Keep Your XML Sitemap Up to Date

From the perspective of SEO, accessibility, and AI agent operation, keeping your XML sitemap up to date is essential. For SEO, it helps search engine bots better understand and index your site. For accessibility, some specialized browsers use sitemaps to create simplified page views where users only see or read headings and links.

For AI agents, the benefits are even greater:

- sitemap.xml acts as a ready-made directory, letting the AI agent discover pages without crawling, rendering, and scanning each one just to find links. This can significantly reduce response time.

- With <priority> tags, AI agents know which pages the site owner considers most important relative to the rest of the site.

- With <lastmod> tags, agents programmed for data updates can see which content has changed. This reduces unnecessary operations and token usage for LLMs.

- Faster responses – An AI agent (e.g., Operator) can scan the sitemap to check whether your site contains FAQs or contact info and respond quickly.
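As an illustration of the lastmod and priority points above, here is a minimal Python sketch of how an agent might read a sitemap with the standard library's `xml.etree.ElementTree`: keep only URLs changed since a given date and rank them by priority. The `changed_since` function is hypothetical; the namespace and the 0.5 default priority come from the sitemaps.org protocol, and skipping entries without a lastmod value is a design choice of this sketch.

```python
import xml.etree.ElementTree as ET
from datetime import date

NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def changed_since(sitemap_xml: str, since: date) -> list[tuple[str, float]]:
    """Return (url, priority) for entries modified on/after `since`, highest priority first."""
    root = ET.fromstring(sitemap_xml)
    results = []
    for url in root.findall("sm:url", NS):
        loc = url.findtext("sm:loc", default="", namespaces=NS).strip()
        lastmod = url.findtext("sm:lastmod", default="", namespaces=NS).strip()
        # A missing <priority> defaults to 0.5 under the sitemaps.org protocol.
        priority = float(url.findtext("sm:priority", default="0.5", namespaces=NS))
        # <lastmod> may be a date or a full W3C datetime; compare the date part only.
        # Entries without <lastmod> are skipped: the agent cannot tell if they changed.
        if lastmod and date.fromisoformat(lastmod[:10]) >= since:
            results.append((loc, priority))
    return sorted(results, key=lambda item: item[1], reverse=True)
```

Because only the sitemap is fetched, an agent can decide which pages are worth loading before spending any rendering or tokens on them.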

Conclusion

The shift from UX (User Experience) to AX (Agent Experience) represents a revolutionary transformation that we, as designers, must adapt to. The traditional principles of design, centered on the human user and their needs, emotions, habits, and problems, are no longer sufficient. The human will become the decision-maker in the AI agent’s workflow, while the AI agent will execute the work.

Focusing solely on responsive variants (desktop, tablet, mobile) or light vs. dark mode is no longer enough. The interfaces we must begin designing now are not just tools for users; they are becoming workspaces for AI agents.

Designing for AI agents requires a completely different set of principles: more emphasis on data structure, semantics, clarity, and accessibility. Just as designing for multiple screen sizes became mandatory, designing for AI agents will become essential. In this article, we outlined how we envision interfaces optimized for AI agents’ performance, as well as how we see interfaces for humans, whose role will be fundamentally different. The human is no longer the executor, but the approver.

The role of the designer must evolve today: it’s no longer about designing the user’s action path, but the agent’s decision path, which the human simply reviews, approves, or corrects. The interface becomes a tool for supervision, understanding, and collaboration between humans and AI—not just a flat medium for clicking.