Shadow AI puts your company at risk (AI tools you should and shouldn’t use)

One of the biggest risks in your company is mostly hidden: Shadow AI = AI tools already being used by your employees without approval from IT or security teams.

Shadow AI is already in most companies, and it creates big gaps in visibility and control. And you can’t protect what you don’t know exists.

In this article, I bring together insights from two AI reports (see Sources) to tell you more about Shadow AI and what you can do about it.


What is Shadow IT vs Shadow AI?

Shadow IT and Shadow AI are similar in concept but apply to different technologies:

- Shadow IT: When employees use software, tools, or devices without approval from the IT department. Example: using personal Dropbox for work files.

- Shadow AI: When employees use AI tools (like ChatGPT) without informing or getting approval from their company. This can include uploading sensitive data to public AI platforms.

I mentioned BYOAI, augmented working, and Shadow AI in one of my previous articles: 9 REAL AI trends to watch in 2025

The Shadow AI Era Is Driven by the GenAI Divide

To understand how big the Shadow AI problem is, we need to understand why employees use tools without permission. Most of them aren’t being careless. They’re using these tools because they help solve real problems and often work better than official systems.

Even though companies have spent $30–40 billion on AI solutions, 95% are seeing no value. The authors of the report call this the GenAI Divide. Many organizations test enterprise-grade AI tools, but only 5% move to full use. These tools often fail because they don’t fit into daily work, don’t learn context, or are too hard to use.

As one CIO said, “We’ve seen dozens of demos this year. Maybe one or two are useful. The rest are just wrappers or side projects.”

The scale is surprising. Only 40% of companies bought LLM subscriptions, but over 90% of workers reported using AI tools at work. Almost everyone used a generative AI tool in some way. People are bridging the GenAI Divide with personal AI tools, while security teams remain unaware of what’s being used.

What does this mean in practice? Below you’ll find five findings from Reco AI’s 2025 State of Shadow AI Report.

Finding 1 – The Real Threat: Risky Tools and Failing Audits

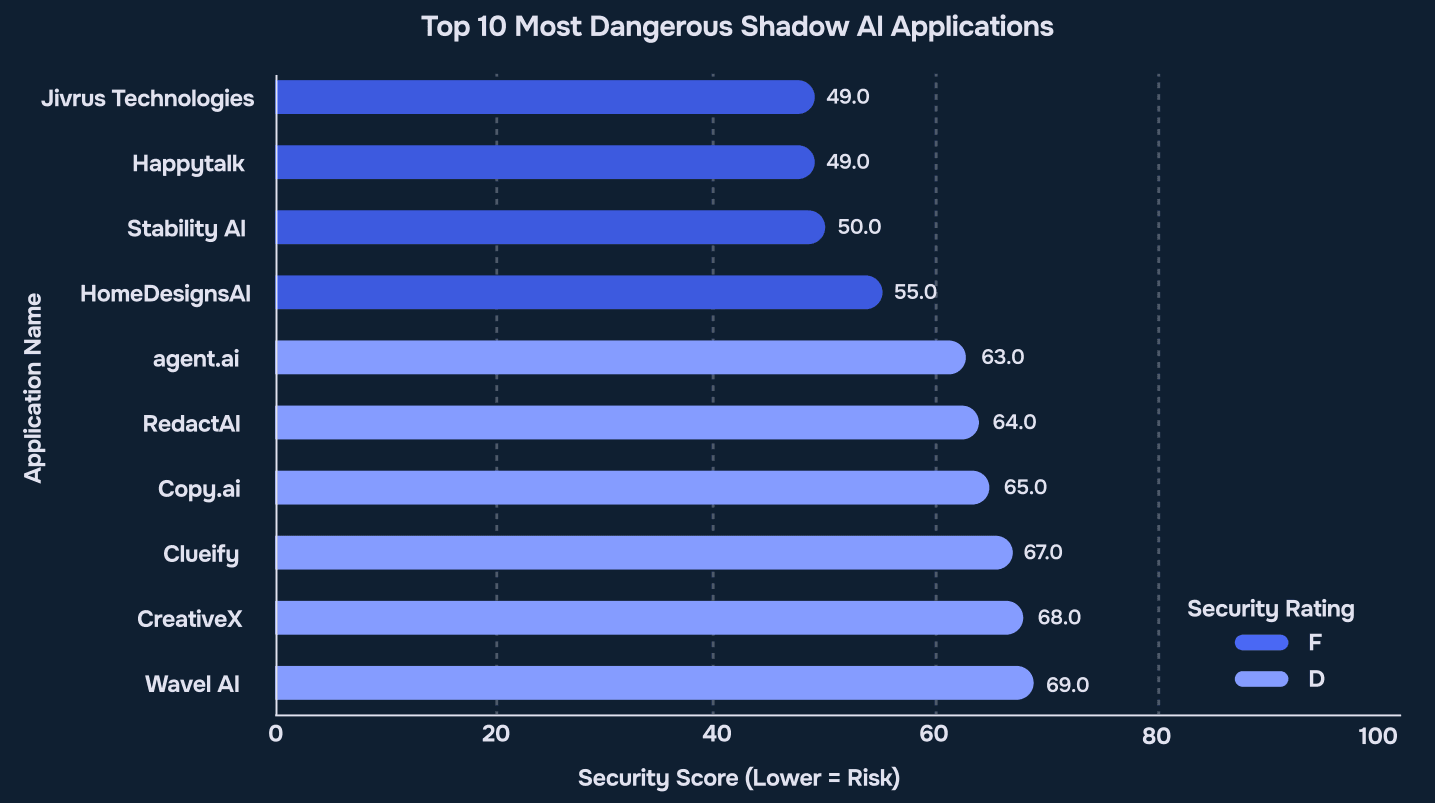

Source: Reco AI, 2025 State of Shadow AI Report, p. 9

The first finding is serious. The authors found that many high-risk AI tools are used every day to process corporate data. These tools are often not approved and have weak security.

To prove this, they:

- used internal data and SaaS audit logs to find these tools,

- checked each one for the number of users, how long people used it, how they signed in (with personal or company accounts), and if the use was visible to IT,

- ran a full Security Indicator Assessment to check key features like:

  - Encryption at rest

  - HTTPS

  - Strong passwords

  - Single sign-on (SSO)

  - Audit logs

  - Two-factor authentication (2FA)

Each tool was rated with Pass, Warn, or Fail. This gave the research authors an overall risk score.
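To make the idea of rolling Pass, Warn, and Fail ratings into one score more concrete, here is a minimal Python sketch. The report doesn’t publish its formula, so the penalty weights, the `risk_score` function, and the 0 to 1 scale are assumptions made for this example, not Reco AI’s actual method.

```python
from enum import Enum


class Result(Enum):
    PASS = "pass"
    WARN = "warn"
    FAIL = "fail"


# The six indicators named in the list above.
INDICATORS = (
    "encryption_at_rest",
    "https",
    "strong_passwords",
    "sso",
    "audit_logs",
    "two_factor_auth",
)

# Hypothetical penalty points per result (assumption: the report's weights are not public).
PENALTY = {Result.PASS: 0, Result.WARN: 1, Result.FAIL: 2}


def risk_score(assessment: dict) -> float:
    """Return a score from 0.0 (all Pass) to 1.0 (all Fail).

    Indicators missing from the assessment are treated as Fail.
    """
    total = sum(PENALTY[assessment.get(name, Result.FAIL)] for name in INDICATORS)
    return total / (2 * len(INDICATORS))


# Example: one Warn and one Fail out of six indicators.
example = {name: Result.PASS for name in INDICATORS}
example["sso"] = Result.WARN
example["audit_logs"] = Result.FAIL
print(risk_score(example))  # 3 penalty points out of 12 -> 0.25
```

Treating a missing indicator as Fail is a deliberately cautious choice: a tool that doesn’t document a control shouldn’t get credit for it.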

Three tools – Jivrus Technologies, Happytalk, and Stability AI – failed. They had no basic protections like MFA, RBAC, or audit logs. They also had no data encryption or security certificates.

Seven more tools – HomeDesignsAI, agent.ai, RedactAI, Copy.ai, Clueify, CreativeX, and WaveAI – got a D rating. These also had serious issues: weak data protection, no clear access control, and unclear data storage practices.

If your company uses any of these tools, you are likely exposing sensitive information and should act fast to remove or secure them.

Finding 2 – Popular Tools Are Not Always Safe

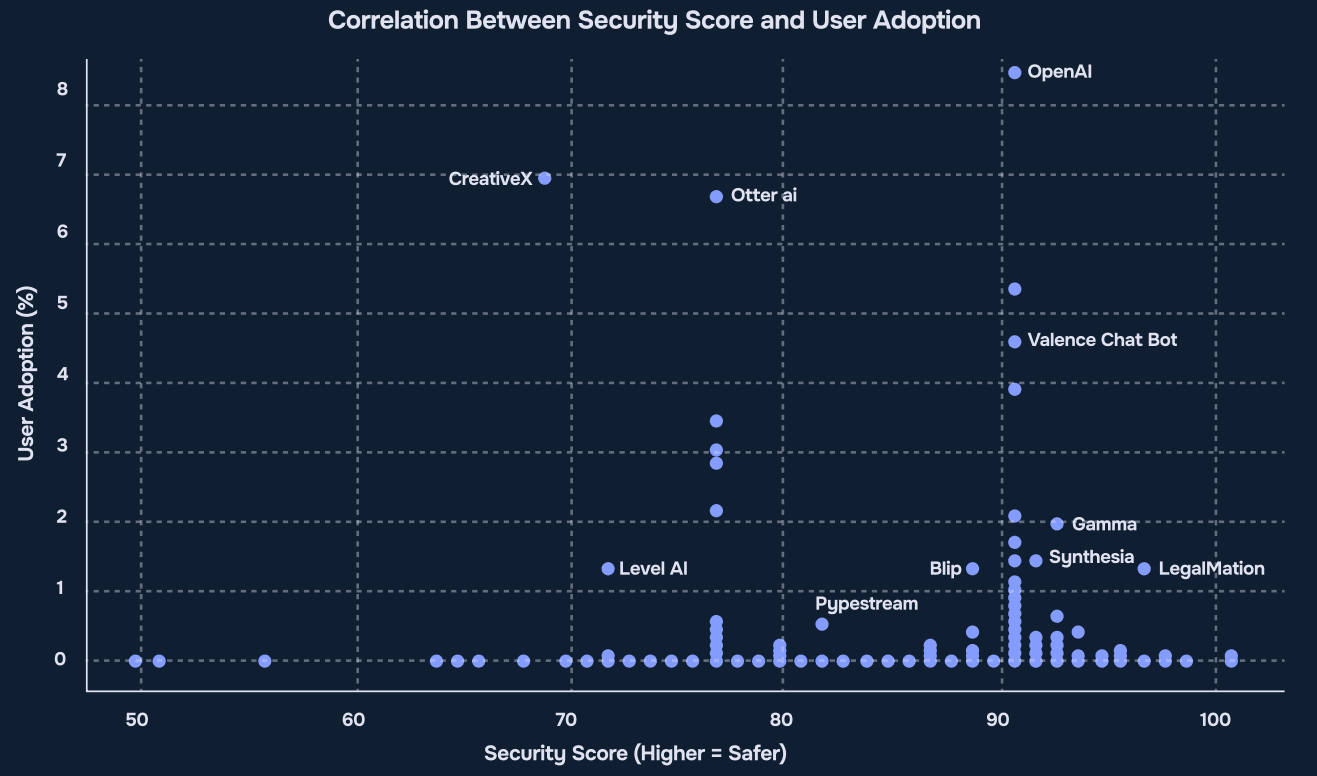

Source: Reco AI, 2025 State of Shadow AI Report, p. 11


The second finding shows a common problem. The most used AI applications are often the least secure. Companies choose tools based on features or convenience – not security.

The report’s authors compared usage with security scores. Some tools, like CreativeX and Otter.ai, are widely used but have poor security. Still, thousands of employees use them. On the other side, secure tools like LegalMation and Gamma are used very little.

A few tools do things right. OpenAI and Valence Chat Bot both had good security scores and relatively high usage. But these are rare cases. To fix this issue, companies should publish approved tool lists, so employees pick safe options.

Finding 3 – OpenAI Is a Single Point of Risk

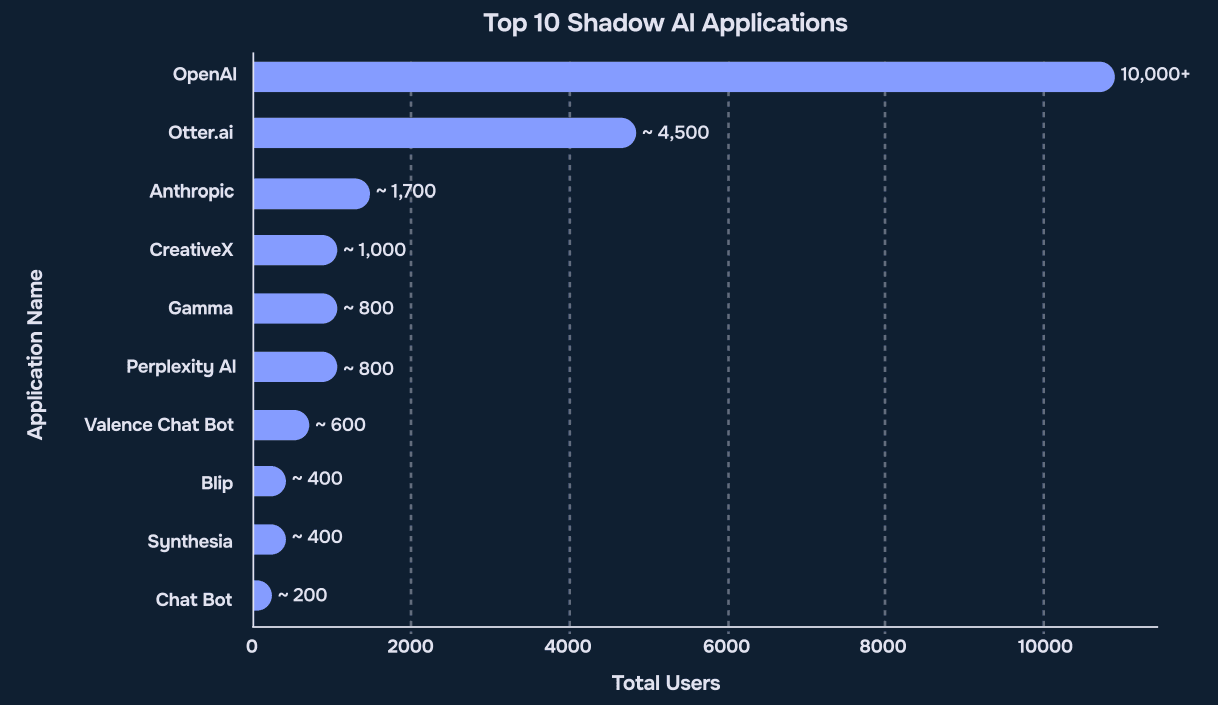

Source: Reco AI, 2025 State of Shadow AI Report, p. 13


The third insight is about OpenAI. It now makes up 53% of all Shadow AI usage, with over 10,000 enterprise users.

This is a problem because so much corporate data flows through one tool. It handles more than the next nine tools combined. That’s why, if OpenAI has a problem, it can affect almost every team.

While teams are busy focusing on OpenAI, other tools like Valence Chat Bot, Perplexity, Blip, and Synthesia are quietly becoming more and more popular. And once more, these tools often process sensitive information without IT knowing.

What to do? You should set specific rules for OpenAI use. These include clear policies on what data can be used, which features are allowed, and who can connect it to systems like Google Drive or HubSpot.

Finding 4 – Shadow AI Tools Stay Longer Than You Think

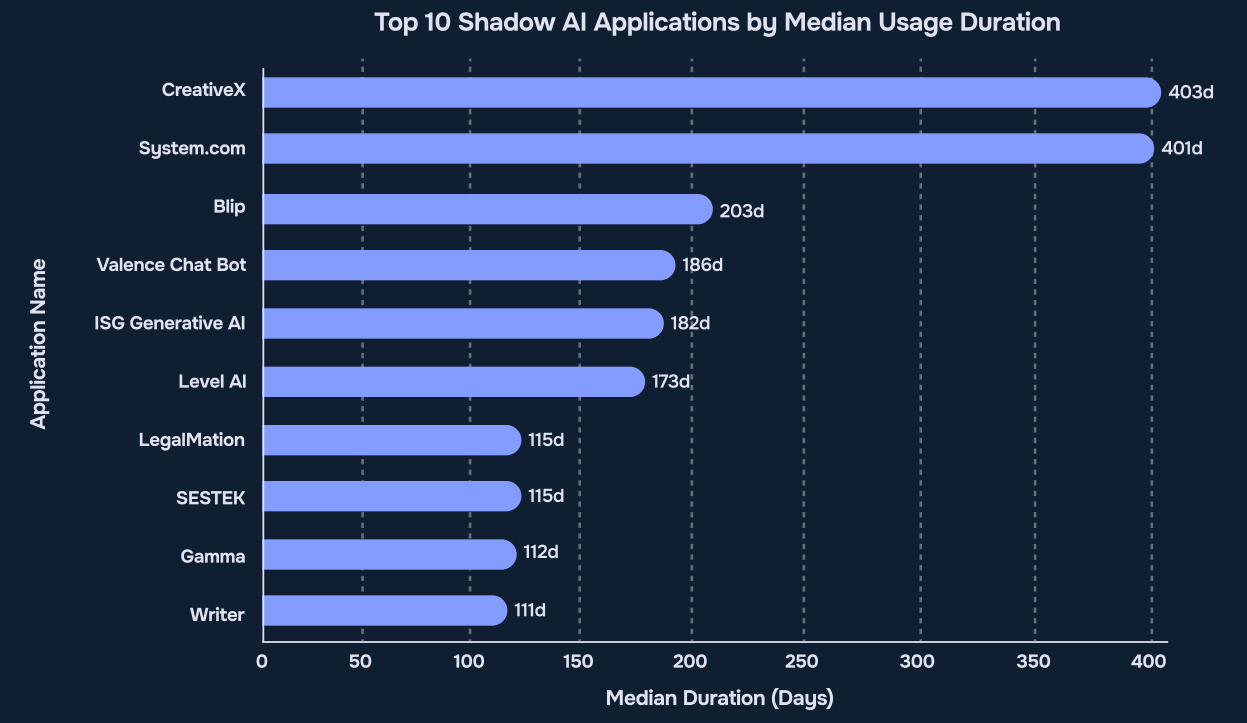

Source: Reco AI, 2025 State of Shadow AI Report, p. 15


These Shadow AI tools don’t go away after a few days. Quite the contrary: they stay and become part of regular work.

CreativeX and System.com were each used for over 400 days on average, which is more than a year of continuous use. That means they became part of daily workflows. These tools likely processed hundreds of documents, emails, or other sensitive data, all without oversight.

The authors consider a tool “stopped” only if it wasn’t used for 60 days. If it’s been used longer than 60 days, it should be reviewed for formal approval or replacement.

Tools used for over 100 days are not being tested — they are part of the business. Removing them is hard because people rely on them for work. But the longer they stay, the more risk they bring. Many of them have access to emails, cloud storage, and internal systems.

What to do? Companies should audit any AI tool used for over 60 days. Either approve it with controls or replace it with a secure option.
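The 60-day rule above is easy to automate once you have first-seen and last-seen dates for each tool. The sketch below is one possible way to do it in Python; the `ToolUsage` record, the `classify` function, and the label names are hypothetical, and only the two 60-day thresholds come from the text.

```python
from dataclasses import dataclass
from datetime import date

REVIEW_AFTER_DAYS = 60   # audit tools used for longer than this
STOPPED_AFTER_DAYS = 60  # a tool idle for this long counts as "stopped"


@dataclass
class ToolUsage:
    name: str
    first_seen: date
    last_seen: date


def classify(tool: ToolUsage, today: date) -> str:
    """Return 'stopped', 'needs_review', or 'recent' for one tool."""
    idle_days = (today - tool.last_seen).days
    if idle_days >= STOPPED_AFTER_DAYS:
        return "stopped"
    active_days = (tool.last_seen - tool.first_seen).days
    if active_days > REVIEW_AFTER_DAYS:
        return "needs_review"
    return "recent"


# Example with made-up dates: in use for over a year and seen last week.
tool = ToolUsage("example-tool", date(2024, 1, 1), date(2025, 3, 1))
print(classify(tool, today=date(2025, 3, 10)))  # needs_review
```

Anything labeled `needs_review` would then go through the approve-or-replace decision described above.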

Finding 5 – Small Companies Have Bigger Risk

Source: Reco AI, 2025 State of Shadow AI Report, p. 17

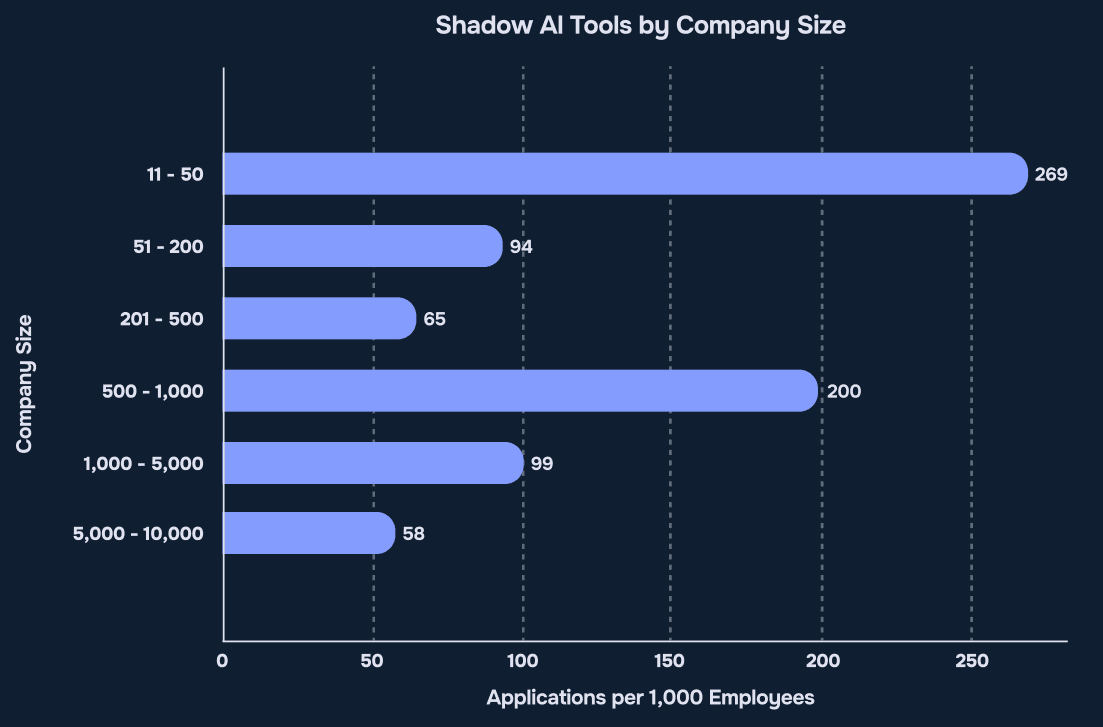

The fifth and final finding shows a surprising trend. Small and mid-size companies (SMBs) have more Shadow AI use per employee than large companies.

- In companies with 11–50 people, 27% of workers use unapproved AI tools. These companies often don’t have enough staff or tools to manage AI risks.

- Mid-size firms (501–1,000 employees) use about 200 Shadow AI tools per 1,000 workers. They face the same security rules as big companies but have fewer resources.

Small companies move fast and skip long approval steps. This helps with innovation, but it also creates risk. Employees may use AI tools freely and unknowingly expose customer data and company secrets.

What to do? If your team is small, focus on blocking or monitoring the highest-risk tools, especially ones that access email, customer data, or write code.

- Also check: Best AI tools for your business

Why Enterprise AI Tools Fail to Compete

The growth of Shadow AI is tied to the failure of official enterprise tools. The problem is not tech or people: it’s learning. Most systems don’t remember feedback, don’t improve, and don’t fit users’ needs.

This is why most enterprise AI tools don’t get used. People who use tools like ChatGPT expect memory and personalization. When comparing tools, users say consumer AI tools are better, easier, and more trustworthy.

For important work, users want tools that learn and adapt. Most say ChatGPT is great for early ideas, but for more serious tasks, 90% prefer working with a person.

This is why Shadow AI wins: it’s easy to use and gives fast results, even if it’s less secure.

What to Do Next: Focus on Governance, Don’t Ban

AI usage will keep growing. You can’t stop it, but you can manage it. Security teams need to shift to a practical approach. So, how do you detect Shadow AI?

1. Check Network Logs

If you control your company’s internet (via firewall or router), pull logs and look for traffic to AI services like:

- openai.com

- chat.openai.com and chatgpt.com

- gemini.google.com (formerly bard.google.com)

- claude.ai

- midjourney.com

Ask your IT admin or MSP to help if needed.
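If your firewall or proxy can export logs as plain text, a few lines of Python are enough for a first pass. This is only a sketch: the hostname pattern is a rough assumption (log formats differ per vendor), the function names are made up for this example, and the domain set simply mirrors the list above, so extend it for your environment.

```python
import re
from collections import Counter

# Domains from the list above; subdomains such as api.openai.com also match.
AI_DOMAINS = {"openai.com", "chat.openai.com", "bard.google.com", "claude.ai", "midjourney.com"}

# Rough hostname pattern (assumption: hostnames appear as plain text in each log line).
HOSTNAME = re.compile(r"\b(?:[a-z0-9-]+\.)+[a-z]{2,}\b", re.IGNORECASE)


def is_ai_host(host: str) -> bool:
    """True if host equals a listed domain or is a subdomain of one."""
    host = host.lower().rstrip(".")
    return any(host == d or host.endswith("." + d) for d in AI_DOMAINS)


def count_ai_hits(lines) -> Counter:
    """Count how often each AI-related hostname appears in the log lines."""
    hits = Counter()
    for line in lines:
        for host in HOSTNAME.findall(line):
            if is_ai_host(host):
                hits[host.lower()] += 1
    return hits


# Made-up log lines for illustration.
sample = [
    "2025-01-10 10.0.0.5 CONNECT chat.openai.com:443",
    "2025-01-10 10.0.0.6 GET https://api.openai.com/v1/chat",
    "2025-01-10 10.0.0.7 GET https://example.com/",
]
print(count_ai_hits(sample))
```

Matching on the domain suffix rather than a plain substring avoids false positives from lookalike hosts such as `notopenai.com`.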

2. Install DNS Monitoring

Set up a DNS filtering tool (like Cisco Umbrella, Cloudflare Gateway, or NextDNS). It shows you what sites your employees visit, even in incognito mode. Look for repeated hits on AI-related domains.
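Most DNS filtering tools let you export query logs. The sketch below shows one way to spot repeated hits per device in Python. The CSV layout (`client` and `domain` columns), the `repeated_ai_lookups` function, and the threshold of three lookups are all assumptions for illustration; check your tool’s actual export format before reusing it.

```python
import csv
import io
from collections import Counter

AI_DOMAINS = ("openai.com", "claude.ai", "midjourney.com", "bard.google.com")


def repeated_ai_lookups(csv_text: str, min_hits: int = 3) -> dict:
    """Return {(client, ai_domain): count} for pairs seen at least min_hits times.

    Assumes a hypothetical export with 'client' and 'domain' columns.
    """
    counts = Counter()
    for row in csv.DictReader(io.StringIO(csv_text)):
        domain = row["domain"].lower().rstrip(".")
        for ai in AI_DOMAINS:
            if domain == ai or domain.endswith("." + ai):
                counts[(row["client"], ai)] += 1
                break
    return {key: n for key, n in counts.items() if n >= min_hits}


# Made-up export for illustration.
export = (
    "client,domain\n"
    "laptop-1,chat.openai.com\n"
    "laptop-1,api.openai.com\n"
    "laptop-1,openai.com\n"
    "laptop-2,claude.ai\n"
    "laptop-2,example.com\n"
)
print(repeated_ai_lookups(export))  # {('laptop-1', 'openai.com'): 3}
```

Grouping by device and applying a threshold separates someone who opened a link once from a tool that has become part of a daily workflow.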

3. Use Endpoint Monitoring

Install endpoint agents (like CrowdStrike, SentinelOne, Microsoft Defender for Endpoint). These show:

- Apps and browser extensions used

- Clipboard activity

- File uploads or unusual behavior

Flag any use of AI tools.

4. Run an Employee Survey

Keep it short and open, but don’t frame it as a threat:

- “Do you use any AI tools for your work?”

- “Which tools?”

- “What tasks do you use them for?”

5. Check Outputs

Review work output:

- Sudden changes in writing tone or speed

- Repetitive use of phrases

- Fast generation of content or code

If something feels too fast or too polished, it might be AI-assisted.

And once you’ve identified the AI tools that are used “in shadow”, here are four actions to take:

- Create OpenAI Rules: Since OpenAI is used the most, make clear rules for data, approved uses, and system connections like HubSpot or Google Drive.

- Share a List of Approved Tools: Most employees pick tools based on features. Help them choose safe ones by publishing a list of trusted tools for tasks like writing or coding.

- Fix High-Risk Tools First: Act fast on tools with F grades (Jivrus Technologies, Happytalk, Stability AI). Review any tool used for over 60 days. Approve it with controls or replace it.

- Help Small Teams: If you have a small team, whitelist 3–5 trusted AI tools instead of trying to manage dozens. Use a “default deny” rule for all others.
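The “default deny” rule from the last action can be expressed in a few lines. Here is a minimal Python sketch, assuming you keep the approved list as a simple set; the tool names and the `decide` function are placeholders, not recommendations.

```python
# Hypothetical allowlist: replace with the 3–5 tools your team has actually vetted.
APPROVED_AI_TOOLS = frozenset({"tool-a", "tool-b", "tool-c"})


def decide(tool_name: str, approved=APPROVED_AI_TOOLS) -> str:
    """Default deny: an AI tool is allowed only if it is explicitly approved."""
    return "allow" if tool_name.strip().lower() in approved else "deny"


print(decide("Tool-A"))        # allow
print(decide("unknown-tool"))  # deny
```

The point of the design is that a new tool needs no rule of its own: anything not on the list is denied until someone reviews it and adds it.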

If you’d prefer a more formal approach, you can follow ISO/IEC 42001:2023, the first international standard for establishing an AI Management System (AIMS), i.e. a structured, auditable framework for managing AI risk, oversight, and continuous improvement. Keep in mind, though, that ISO 42001 is a management standard, not a product specification or technical checklist.

How we deal with Shadow AI at Pragmatic Coders

At Pragmatic Coders, we automate lots of tasks both for our back-office and for client projects using a protected Zapier account. For more complex automations, we use language models from proven vendors like OpenAI or Meta, and self-host n8n so that we’re sure the client’s data is always safe. This approach lets our back-office teams work in an innovative and, at the same time, secure way.

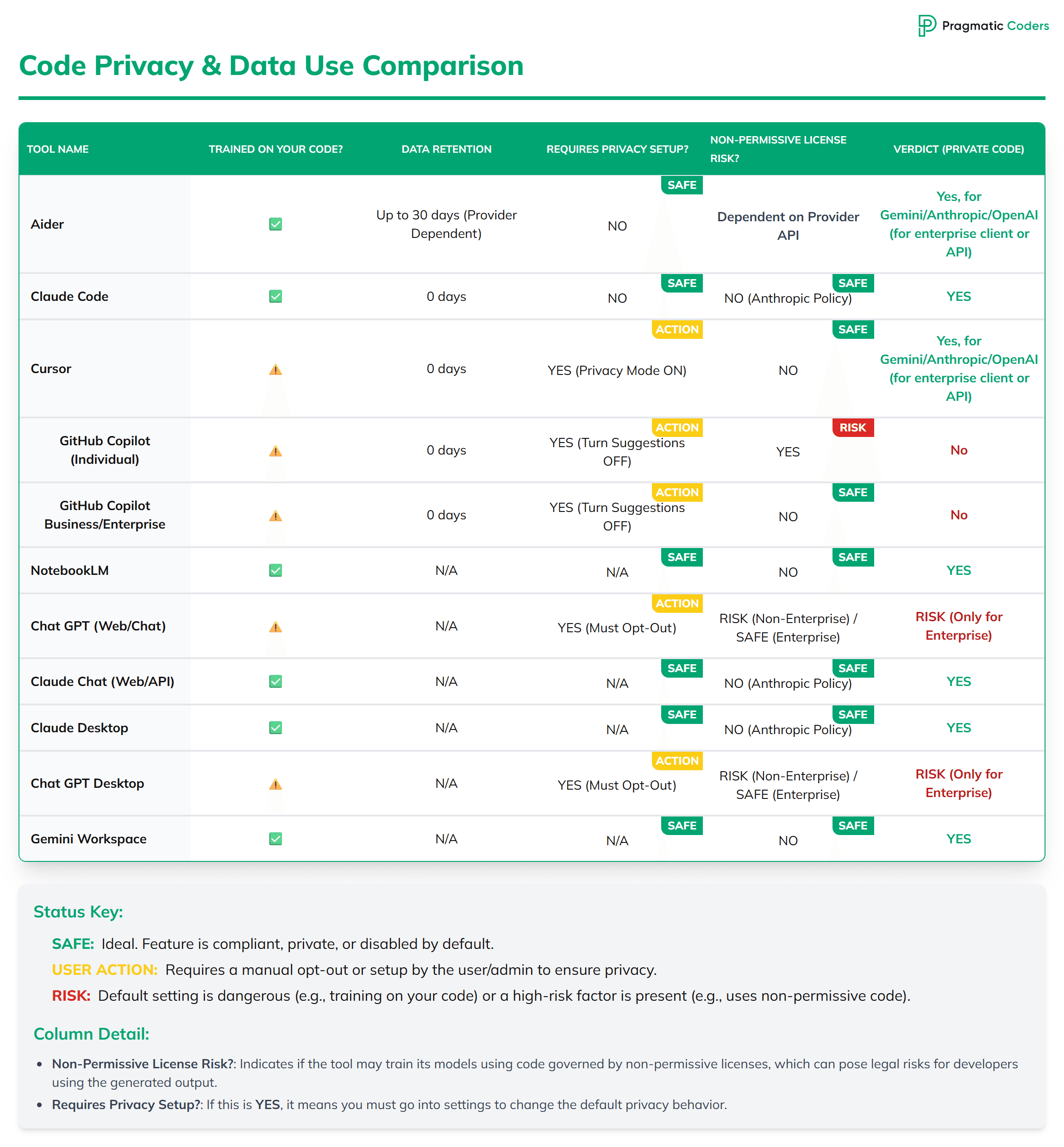

For back-office AND client projects, we created a table comparison for 11 AI tools. Feel free to save the image below to check whether a tool is safe or not for you to use:

Bottom line

Now is the time to act. If you want to build your app with a software partner who REALLY keeps their clients’ data secure, let’s talk now.

Sources

Here are the sources used when writing this article.

FAQ

What is Shadow AI?

Shadow AI refers to the AI tools (like generative AI chatbots or browser extensions) that employees use for work tasks without the official approval, knowledge, or oversight of the company’s IT or security teams.

How is Shadow AI different from Shadow IT?

- Shadow IT is a broader term for employees using any unauthorized software, tools, or devices (e.g., using a personal Dropbox for work files).

- Shadow AI is a specific type of Shadow IT focused on the unauthorized use of AI tools, which introduces unique risks related to how AI models handle and process corporate data.

What are the main risks of Shadow AI?

The biggest risks are:

- Data Leakage: Employees may upload sensitive, proprietary, or customer data to public AI platforms, where security teams cannot control how it’s stored or used.

- Security Vulnerabilities: Many unapproved tools fail basic security checks (like two-factor authentication, encryption, and audit logs), making them a high-risk entry point for attackers.

- Compliance Issues: Using unvetted tools can violate data protection regulations like GDPR or HIPAA.

- Single Point of Failure: With one tool (like OpenAI) making up over half of all Shadow AI use, a breach in that one tool could affect almost every team.

How widespread is Shadow AI?

The problem is extensive:

- Over 90% of employees report using AI tools at work, often without approval.

- OpenAI (including ChatGPT) is the most-used Shadow AI tool, accounting for 53% of all usage.

- In companies with 11–50 people, 27% of workers use unapproved AI tools, showing that small companies face a larger risk per employee.

Why do employees use unapproved AI tools (Shadow AI)?

Employees use them because they are often more effective, easier to use, and provide faster results than official, enterprise-grade systems, which often fail to fit into daily work or lack the memory and personalization users expect. This phenomenon is called the GenAI Divide.

Should we block or ban all AI tools to eliminate the risk?

No. Banning AI tools is generally counterproductive and impractical, as employees will find workarounds, and the company will lose out on productivity gains. The recommended approach is to manage the risk through governance, tracking, clear rules, and approving secure options.

What are the first steps my company should take to manage Shadow AI?

You should focus on detection and governance:

- Detect Usage: Check network logs, install DNS monitoring, use endpoint monitoring tools, and run a non-threatening employee survey.

- Act on Findings:

  - Create specific rules for the most-used tool, like OpenAI.

  - Publish a list of approved, secure AI tools to guide employees.

  - Prioritize fixing or removing tools with ‘Fail’ grades in security audits first (e.g., Jivrus Technologies, Happytalk, Stability AI).

  - Audit any AI tool used for over 60 days, as these are likely integrated into daily business.

What can small companies do to manage Shadow AI with fewer resources?

Small and mid-size businesses face a bigger risk per employee. Instead of trying to manage dozens of tools, small teams should whitelist 3–5 trusted AI tools and apply a “default deny” rule for all others, especially for tools that handle sensitive information like customer data or code.

Which AI assistants are the safest to use for handling private code?

The tools that offer the highest level of default safety and privacy for handling private code are:

- Claude Code

- Claude Chat (Web/API)

- Claude Desktop

- Gemini Workspace

- NotebookLM

Why these tools are considered the safest:

- Training on Your Code: All of them have a green checkmark (✓) in our comparison table, meaning your code is not used to train the underlying AI models. Training on your code is the biggest risk for proprietary code.

- Privacy Setup: The strongest privacy protections are applied by default and require no or minimal setup from the user (“SAFE” for required setup).

- License Risk: They have clear policies that prevent them from introducing non-permissive license risks into your codebase.